Before you can build a powerful AI model, you need the right ingredients. Data sourcing is the methodical process of finding, gathering, and preparing the high-quality data your model needs to learn and perform accurately. Think of it as a chef meticulously selecting ingredients; the final dish is only as good as the raw materials you start with.

In the same way, your AI’s performance, accuracy, and real-world reliability hinge entirely on the data you feed it. Getting this first step right is the single most critical factor in achieving measurable business impact.

What Is Data Sourcing Explained Simply

Powerful AI is not born from complex code alone; it is built on a foundation of solid, relevant data. Data sourcing is that critical first step in any machine learning project, and it most directly determines whether your model succeeds or fails. It is far more than just downloading a file; it is a disciplined process of identifying and acquiring the raw materials your algorithm needs to become intelligent and effective.

A smart data sourcing strategy ensures the information you collect is accurate, relevant, and diverse enough to handle real-world scenarios. Without this thoughtful approach, teams often end up building models on skewed or incomplete data, which leads to poor performance, biased outcomes, and expensive rework.

The True Foundation of AI Success

The impact of high-quality data cannot be overstated. When an AI model trains on clean, well-structured information, it improves its ability to make sharp predictions, identify hidden patterns, and deliver tangible business value. This is why leading organizations invest heavily in mastering their data acquisition and preparation pipelines.

An effective sourcing strategy boils down to a few core principles. To give you a clearer picture, here is a quick-reference table summarizing the core components of any robust data sourcing strategy.

Quick Guide to Data Sourcing Fundamentals

| Component | Description | Impact on AI |

|---|---|---|

| Relevance | The data must directly address the problem you are solving. | Ensures the model learns the right patterns to achieve its goal. |

| Accuracy | Data should be correct, complete, and free from errors. | Prevents the AI from learning incorrect information, leading to reliable outputs. |

| Diversity | The dataset must represent the real-world population it will serve. | Minimizes algorithmic bias and ensures fair, equitable performance for all user groups. |

| Volume | Sufficient data is needed for the model to generalize effectively. | A larger, high-quality dataset helps the model become more robust and less prone to overfitting. |

Each of these components is a pillar supporting your AI project. If one is weak, the entire structure is at risk.

Sourcing the right data is the difference between an AI that delivers genuine business intelligence and one that just generates noise. It is the single most important investment in the machine learning lifecycle.

The process often involves blending data from multiple places to build rich and comprehensive AI training datasets. This mix gives the model a more nuanced and complete view of the world, much like how reading multiple books on a topic makes you more of an expert. Ultimately, a solid data sourcing plan is the blueprint for any AI system that hopes to be reliable, scalable, and fair.

Uncovering the Right Data Sources for Your AI

Not all data is created equal, and knowing where to look is the first hurdle in building a powerful AI model. The universe of available information is massive, but it breaks down into a few key categories that will shape your entire data sourcing strategy.

Getting these categories right means you can select the perfect mix of "ingredients" for your specific AI recipe. The most foundational starting point is determining what data you already have versus what data you need to find.

Internal vs. External Data

Internal data is the information your organization already creates and collects through its daily operations. Think of it as your proprietary goldmine, holding direct clues about your customers, internal processes, and performance. Since you control its collection, it is often more structured and directly relevant to your business challenges.

A few practical examples of high-value internal data include:

- Customer Relationship Management (CRM) data: Purchase histories, communication logs, and customer support tickets.

- Operational logs: Website clickstream data, server activity, and application performance metrics.

- Financial records: Transaction histories, sales figures, and inventory levels.

On the flip side, external data comes from outside your organization. This information provides the broader context your internal data lacks, such as market trends, competitor activities, and demographic shifts. You can acquire external data from public sources, specialized third-party vendors, or APIs.

By blending the detailed, specific knowledge from internal data with the broad context of external data, you create a dataset that is both deep and wide, giving your AI a more complete picture of reality.

This combination is where the magic happens. An e-commerce company, for instance, could use its internal sales data to see what products are selling. It could then layer in external demographic data to understand who is buying them and why. This approach creates a far more robust foundation for any predictive model.

Primary vs. Secondary Data

Beyond where data originates, you also must consider how it was collected. This distinction is crucial for judging its relevance and identifying potential limitations.

Primary data is information you gather yourself for a specific purpose. It is custom-collected to answer a unique question or train a particular AI model. Practical examples include conducting customer surveys to gauge interest in a new feature or capturing thousands of images to train a unique object detection algorithm.

The significant advantage of primary data is its direct relevance. The downside is that collecting it can be expensive and time-consuming.

In contrast, secondary data is information someone else has already collected for a different reason. This includes most public datasets, government statistics, and market research reports. While much cheaper and faster to obtain, secondary data may not be a perfect fit for your project and will likely require significant cleaning and adaptation.

For example, a model built to answer complex customer questions might start with a pre-existing public dataset as a foundation. However, to truly excel, it needs to be enriched with primary, company-specific data. This is where techniques discussed in our guide on Retrieval-Augmented Generation become critical, showing how models can pull from both established and proprietary knowledge to give accurate, context-aware answers.

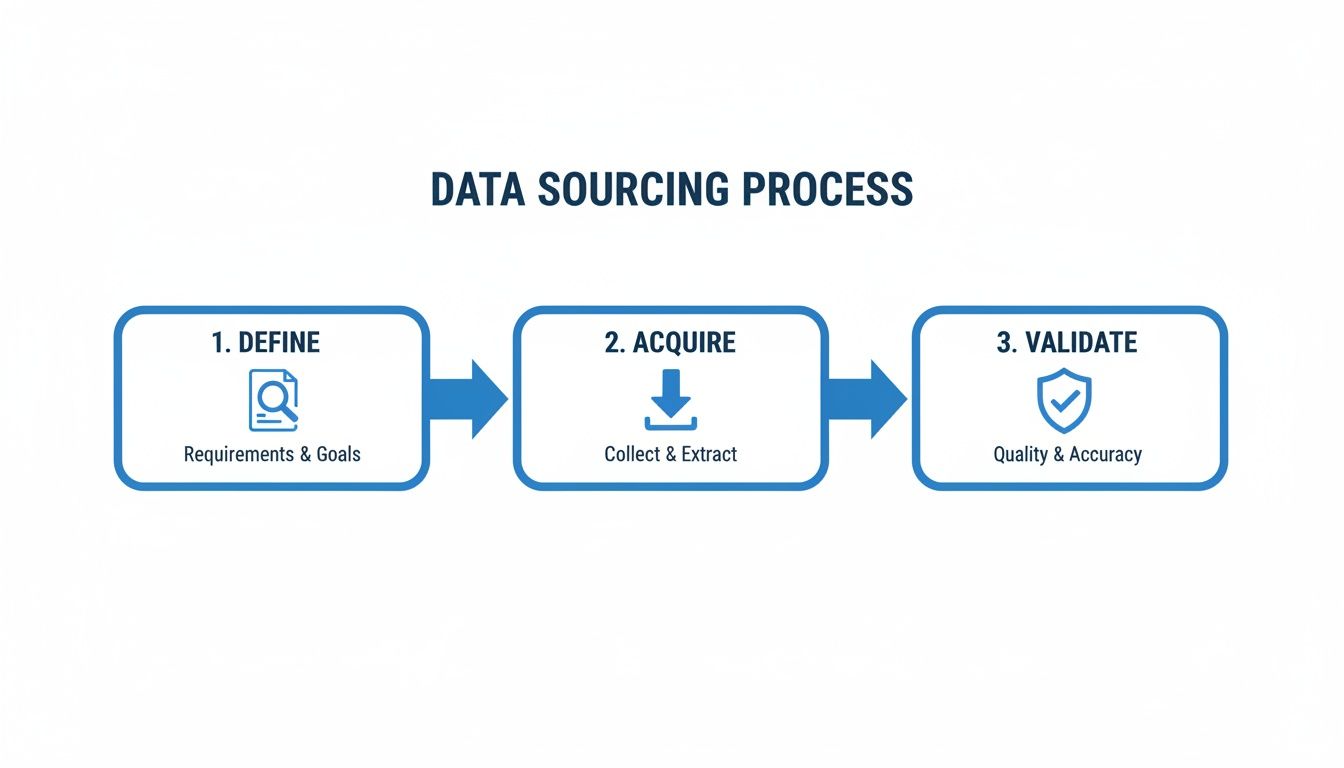

Your Step-by-Step Data Sourcing Workflow

Great AI does not happen by accident. It is built on a foundation of clean, relevant, and well-managed data. Effective data sourcing is not just a one-off task; it is a structured, repeatable workflow that takes you from a project idea to a model-ready dataset.

Following a clear process is the best way to prevent costly errors, root out hidden bias, and ensure the final data aligns perfectly with your model's goals. Think of it as a blueprint for building high-quality AI. Each stage builds on the last, creating a clear chain of accountability for data quality from start to finish.

Let's walk through the key stages of a typical data sourcing workflow.

Stage 1: Defining Your Data Requirements

Before you can find the right data, you must know exactly what you are looking for. This first stage is about translating your business objectives into specific data needs. It is where you get granular and ask the tough questions that guide the entire sourcing effort.

Assemble your team and answer these critical questions:

- What problem is the AI model trying to solve? Is it predicting customer churn, identifying manufacturing defects, or automating document processing?

- What data attributes are needed for accurate predictions? This could range from customer tenure and product usage to machine sensor readings and timestamps.

- What is the right volume of data? How much do you need to train the model effectively without collecting more than necessary?

- What must our data represent? Do we need specific demographic or geographic samples to ensure our model is not biased?

Nailing this step prevents you from wasting time and money chasing down irrelevant data. It is also where you must start considering potential licensing issues or compliance constraints that could become roadblocks later.

Stage 2: Identifying and Vetting Sources

With clear requirements in hand, the hunt for data begins. This is where you identify potential internal and external sources that match your needs. But finding a source is not enough; you must vet each one rigorously. Think of this as a critical quality checkpoint.

When evaluating a potential data source, measure it against these criteria:

- Accuracy and Completeness: Does the data have a high volume of missing values or obvious errors? Is it reliable?

- Timeliness: Is the data recent enough to be relevant? Stale data can degrade a model’s performance.

- Licensing and Usage Rights: Does the license permit commercial use for your specific application? Avoid legal complications.

- Potential for Bias: Does the dataset underrepresent certain groups? If so, your model’s performance will be skewed from the start.

This meticulous vetting helps you filter out low-quality or legally problematic sources before you invest real resources in acquiring them.

Stage 3: Acquisition and Annotation

Once you have vetted and selected your sources, it is time to bring the data in-house and prepare it for your model. Data acquisition can be as simple as a file download or as complex as an ongoing API integration.

However, raw data is almost never ready for machine learning right out of the box. This is where data annotation comes in.

Annotation, or labeling, is the process of adding context to raw data so that a machine learning model can understand it. For example, you might draw bounding boxes around cars in images for an object detection model or tag customer feedback with sentiment labels like "positive" or "negative."

The quality of your annotation has a direct and massive impact on your AI’s accuracy. That is why a robust AI quality assurance process is not an afterthought; it is something you build into the workflow from day one.

After all, the global data analytics market is projected to hit over USD 302 billion by 2030, a boom driven entirely by the need for reliable data to power these models. You can dig into the numbers in the full research on data analytics. This explosive growth highlights just how critical high-quality inputs are, reinforcing the need for a structured workflow that guarantees data integrity from the very beginning.

Getting Data Quality and Governance Right

In the world of AI, it is a classic case of quality over quantity. You can have all the data in the world, but if it is messy, biased, or incorrect, your AI model is doomed from the start. This is why data quality and data governance are not just buzzwords; they are the absolute foundation of any trustworthy AI system.

Think of low-quality data as a poison seeping into your project. It inevitably leads to flawed models, skewed predictions, and poor business decisions. Getting your data right means building a solid framework to ensure it is accurate, consistent, and handled ethically from day one.

The journey from raw data to a validated asset is a deliberate process, not an afterthought.

As you can see, validation is not just a final check; it is the critical gatekeeper that ensures only high-quality data enters your AI pipeline.

The Cornerstones of a Trustworthy Dataset

Imagine data quality as a three-legged stool. If any one of those legs is weak, the whole thing topples over. To build a dataset you can rely on, you need to master these three core principles:

- Accuracy: Does the data reflect reality? For an e-commerce model, this is non-negotiable. Product prices, inventory counts, and customer addresses must be spot-on, or the model's outputs will be useless.

- Completeness: Are there major gaps in your dataset? Missing values are like blind spots for an algorithm. A complete dataset gives the model a much clearer picture to learn from, preventing it from making assumptions based on incomplete information.

- Consistency: Is the data uniform everywhere it appears? A customer's name and address should look the same in your CRM, sales logs, and support tickets. Inconsistency creates duplicates and confusion, tripping up your model.

Achieving this level of quality does not happen by accident. It requires a robust framework of automated checks, manual reviews, and clear standards that every single data point must meet.

Navigating Compliance and Ethical Handling

Beyond being clean, your data must be compliant. This is where data governance comes in, covering the legal and ethical rules of the road for your data strategy. Regulations like Europe's GDPR and California's CCPA have sharp teeth, setting strict rules for how personal data is collected, stored, and used.

Failing to comply is not just a slap on the wrist. Under GDPR, fines can reach up to 4% of a company’s annual global turnover. That makes robust compliance a critical business function, not a box-ticking exercise.

But the responsibility does not end with legal compliance. Ethical data handling is about fairness and transparency. It means being upfront about how you use data and actively working to root out biases that could lead to unfair outcomes. For instance, a loan approval AI trained on historically biased data will only perpetuate that same discrimination.

Ethical sourcing demands that you seek out diverse, representative datasets. It is about building responsible AI that serves everyone, not just the majority represented in a flawed dataset. A secure and ethical foundation is not just good practice; it is essential for building a sustainable, trustworthy AI program.

How to Choose the Right Data Sourcing Partner

Finding the right data for your AI project is one thing. Finding the right partner to help you source it is something else entirely. Handing off your data requirements can massively speed up your AI initiatives, but only if you find a partner that operates like an extension of your team, not just another vendor on an invoice.

Making this choice is a strategic move that will ripple through your entire project, affecting everything from model accuracy and your timeline to the final budget. A top-tier partner brings more than just datasets; they offer deep industry knowledge, a scalable infrastructure, and transparent quality control. Crucially, they understand data privacy and compliance inside and out, protecting you from risk while ensuring your AI is built on a solid ethical foundation.

Distinguishing a Partner from a Provider

It is easy to get this wrong. A simple data provider sells you a dataset. A true strategic partner invests in your project’s success. They dig in to understand your business problem and deliver a solution that actually solves it, not just a folder of files.

This distinction is critical, especially for complex, high-stakes AI systems. A partner offers ongoing consultation and improvement, not a one-and-done transaction. More companies are catching on. The data analytics outsourcing market is expected to hit USD 47.65 billion by 2030, growing at a blistering compound annual growth rate of 34.33%. This explosion shows just how vital strategic outsourcing has become for companies looking to manage complex data challenges while boosting efficiency. You can dive deeper into the numbers in this detailed market analysis.

Before we dig into specific qualities, let’s frame the difference between a transactional provider and a true strategic partner. It is a mindset shift that separates successful AI projects from the ones that get stuck in endless rework.

Strategic Partner vs Data Provider: What to Look For

| Attribute | Strategic Partner (e.g., Prudent Partners) | Simple Data Provider |

|---|---|---|

| Relationship | Collaborative, consultative, and long-term. They act as an extension of your team. | Transactional and short-term. The goal is to complete the sale. |

| Expertise | Deep domain knowledge in your specific industry (e.g., healthcare, finance). | Generalist approach, often lacking industry-specific context. |

| Quality | Multi-layer QA, transparent reporting, and a commitment to continuous improvement. | Basic quality checks with little to no transparency into their process. |

| Scalability | Flexible infrastructure and workforce that grows with your project's needs. | Fixed capacity, which can create bottlenecks as your project scales. |

| Focus | Solving your business problem with customized data solutions. | Selling pre-packaged or off-the-shelf datasets. |

| Compliance | Proactively manages privacy, security, and regulatory requirements (HIPAA, ISO). | Places the compliance burden entirely on you, the customer. |

Ultimately, a simple provider gives you what you ask for. A strategic partner helps you figure out what you really need to succeed.

Key Qualities of a Strategic Partner

When you are vetting potential partners, you need to look beyond the sales pitch. Focus on concrete attributes that signal a commitment to quality and genuine collaboration.

- Deep Domain Expertise: Do they understand your industry? A partner with experience in healthcare, for instance, will not need you to explain HIPAA or the complexities of medical image annotation. They already know.

- Transparent Quality Assurance: How do they prove their accuracy claims? Insist on seeing their multi-layer QA process and ask for clear reports on quality metrics. This is a deal-breaker for any serious AI project.

- Scalable Infrastructure: Can they keep up as your project takes off? A solid partner has the technology and a trained workforce ready to scale up or down based on your needs, all without letting quality slide.

A partner's value is measured not just by the data they provide, but by the problems they help you solve. Their ability to integrate seamlessly into your workflow and adapt to your goals is what drives real business impact.

In the end, the goal is to find a partner who can deliver high-quality, ethically sourced data that is perfectly suited for your model. This often involves complex and nuanced tasks, where a partner with proven experience in custom data annotation services can be the difference between success and failure. Choosing wisely is an investment in a relationship that will power your AI innovation for years to come.

Common Questions About Data Sourcing

Even with a clear strategy, a few common questions always arise once teams begin the process. Let's tackle some of the most frequent points of confusion to help you move forward with confidence.

Getting these details right is key to building your AI project on a foundation that is solid, compliant, and ethically sound.

What Is the Difference Between Data Sourcing and Data Mining?

It is easy to confuse these two terms, but they represent distinct stages of the AI development process. A simple cooking analogy can clarify the difference.

- Data sourcing is the grocery shopping. This is the active hunt for the raw ingredients (your data) needed for a specific recipe (your AI model). The entire focus is on finding, acquiring, and preparing the right materials.

- Data mining is the cooking. This part happens after you have all your ingredients. It is the analytical step where you explore the data you have gathered to uncover hidden patterns, surprising trends, and valuable insights.

In short, data sourcing comes first. It provides the raw materials that data mining later transforms into intelligence.

How Can You Ensure Data Is Sourced Ethically?

Ethical data sourcing is not a "nice-to-have"; it is a non-negotiable part of responsible AI. It boils down to three core pillars that protect people and build trust in your technology. Any trustworthy partner will have a clear governance framework to uphold these standards.

The pillars are:

- Compliance: This means strictly following all relevant privacy laws, like GDPR or HIPAA. It involves getting proper user consent, anonymizing personal information, and maintaining secure data handling from start to finish.

- Transparency: You must be upfront about where your data comes from and how it was collected. Hiding sourcing methods is a significant red flag that undermines the integrity of your entire project.

- Fairness: This is about actively working to minimize bias by gathering data from diverse and representative sources. The goal is to build a dataset that reflects the real world, not one that reinforces historical inequalities.

Can You Use Public Data for Commercial AI Projects?

The short answer is yes, but you must be incredibly careful. Public datasets can be a fantastic starting point, but they are not a free-for-all when it comes to commercial use. The single most important factor is the data license.

Many public datasets come with specific terms that spell out exactly how they can be used. Some are completely open for any purpose, including commercial projects. Others are restricted to academic or non-commercial research only. Using a dataset in a way that violates its license can lead to serious legal trouble and financial penalties.

Before you integrate public data into a commercial AI pipeline, it is absolutely essential to have a legal expert or a knowledgeable data sourcing partner review its license. This simple due diligence step protects your project and your entire organization from preventable legal risks.

Navigating these complex licensing rules is a perfect example of where an expert partner provides immediate value. They can help you identify compliant datasets and ensure your project stays on the right side of the law.

Building a successful AI initiative starts with a world-class data foundation. At Prudent Partners, we specialize in providing high-accuracy, ethically sourced, and fully compliant data solutions that empower your models to perform at their best.