Think of an LLM's context window as its short-term memory. It is the digital workspace where the model holds all the information you provide, whether a single question or a hundred-page legal contract. This capacity dictates how much information a large language model can process at once, directly impacting its ability to handle complex tasks.

Decoding the LLM Context Window

At its core, the context window is what allows a large language model to "see" and "remember" during a single conversation. Imagine trying to give instructions to an assistant who can only recall the last five words you said. Their ability to follow complex, multi-step directions would be severely limited.

This is the exact problem the context window solves for an AI. It defines the total amount of text your input (prompt) plus the model's generated output (completion) that the AI can consider at any given moment. But this "memory" is not measured in words or pages. It is measured in tokens.

Tokens are the fundamental building blocks of text for an LLM. A single token might be a whole word like "apple," a part of a word like "ing," or even just a comma. As a general rule, 100 tokens translate to about 75 words.

Why the Context Window Is a Game-Changer

The size of the context window directly shapes an AI's real-world capabilities and, by extension, its business impact. A larger window opens the door to more sophisticated applications, while a smaller one forces engineers to develop creative workarounds. The implications for accuracy, cost, and overall performance are significant.

The ability to process more information in a single pass means the model can maintain conversational flow, understand intricate relationships within long documents, and generate more coherent and relevant outputs. It is the key to moving from simple Q&A bots to sophisticated reasoning engines.

Let's look at a few practical examples from real-world use cases:

- Customer Support: With a large context window, a chatbot can review a customer's entire conversation history on the fly. This means it can provide truly personalized support without asking the same questions repeatedly, improving first-contact resolution rates and customer satisfaction.

- Legal Document Analysis: A lawyer can upload a full contract and ask an LLM to identify key clauses, summarize obligations, and flag potential risks in one pass. This task would be impossible with a small window, demonstrating a measurable impact on efficiency and accuracy in legal reviews.

- Software Development: A developer can paste a large code block and ask the AI to find bugs, suggest optimizations, or write documentation based on the full context of the application, accelerating development cycles and improving code quality.

Understanding these dynamics is the first step toward building reliable and efficient AI solutions. While larger windows offer incredible power, they also introduce critical trade-offs in cost, speed, and even accuracy. Mastering the context window is about balancing these factors to achieve high-quality AI performance that drives tangible business results.

The Explosive Growth of Context Windows

The evolution of the LLM context window has been nothing short of remarkable. Just a few years ago, models could handle a few pages of text, equivalent to a short story. Today, leading models can process entire novels or massive codebases in a single pass. This is not just an incremental improvement; it is a fundamental shift in AI capabilities.

This rapid expansion is not happening in a vacuum. It is a direct response to the demands of businesses and developers. We need AI that can reason over large, unstructured datasets without losing critical context. Whether sifting through dense financial regulations or analyzing a decade of patient records, the need for a larger "short-term memory" has become a major driver in AI development.

From a Few Pages to Entire Libraries

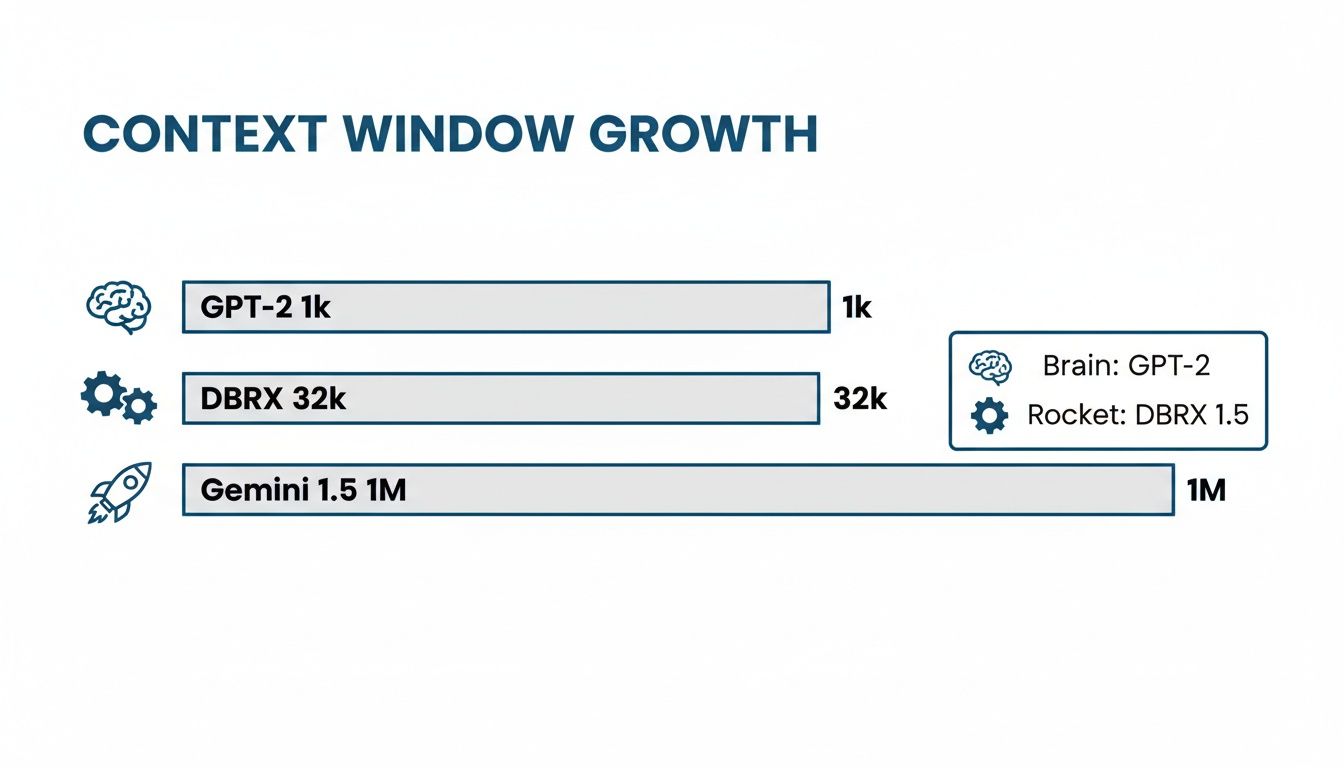

The numbers behind this leap are staggering. We have gone from a mere 1,024 tokens in early models like GPT-2 in 2019 to millions of tokens today. Google’s Gemini 1.5 Pro, for instance, made headlines in early 2024 with its 1 million token window.

This trend is not limited to closed-source giants. Open-source models are catching up fast. Llama's first version topped out at 2,000 tokens, but by 2024, models like Mixtral and DBRX reached 32,000, a 16x increase. These larger windows have real-world payoffs, like cutting API calls by up to 80% in some automated workflows. That means lower costs and better reliability, which is a game-changer for startups and specialized fields like geospatial risk analysis. For a deeper dive, there's valuable research on how context size impacts performance and the new challenges it creates.

To put it in perspective, here's how the scale has changed:

- Early Models (e.g., GPT-2): ~1K tokens. Like remembering the last few minutes of a conversation.

- Mid-Generation Models (e.g., Claude 1): ~100K tokens. Enough to process an entire novella.

- Current-Generation Models (e.g., Gemini 1.5 Pro): ~1M tokens. Capable of reading the entire Lord of the Rings trilogy and then answering questions about it.

Tasks that felt impossible just a year or two ago are now becoming routine, demonstrating scalable impact.

A larger context window transforms an AI from a simple Q&A bot into a strategic partner. It can finally grasp the deep, multifaceted problems that businesses actually face, paving the way for much smarter decision-making.

What Is Fueling the Expansion?

So, why the sudden explosion in size? It comes down to one thing: the demand for more complex, multi-step reasoning. Consider a financial firm that needs an AI to read an entire annual report, footnotes and all, to provide a credible risk assessment. Or a doctor who needs a system that can process a patient's complete medical history to flag potential drug interactions.

These are not tasks you can solve with a small context window. In the past, developers had to rely on cumbersome workarounds like chunking documents or creating summarization chains. While these methods worked, they were often brittle and could easily miss critical details.

The push for larger native context windows is about solving this problem at the source by letting the model see the whole picture at once. But as we will see, this new power comes with its own set of trade-offs, from higher costs and slower response times to the strange phenomenon of information getting "lost in the middle."

How Window Size Impacts AI Performance and Cost

While a massive LLM context window seems like the perfect solution for complex tasks, it creates a tricky balancing act between performance, cost, and accuracy. This is often called the ‘context window problem’, a scenario where simply making the window bigger can create more challenges than it solves. Processing enormous inputs is not a free lunch; it comes with real business consequences.

Expanding the context window directly affects three key metrics: operational cost, response latency, and output accuracy. Every token processed comes with a price tag, and with windows now stretching into the millions of tokens, API bills can spiral out of control. Likewise, asking a model to sift through a mountain of information demands more computational power, leading to slower response times that can degrade the user experience.

The Trade-Off Between Size and Speed

Imagine a financial services firm using an AI to analyze quarterly earnings reports. A model with a huge context window could process the entire 100-page document at once to extract key insights. The catch? The time it takes to generate that summary could be several minutes, far too slow for a real-time investment analysis tool.

This latency becomes even more critical in customer-facing applications. A support bot that takes a full minute to read a long customer history before responding will only lead to frustration and churn. The goal is to find the sweet spot where the context is large enough for the task but small enough for acceptable performance.

This chart shows the dramatic explosion in context window sizes, highlighting the leap from early models to today’s massive capacities.

This massive growth is exactly why managing the ripple effects on cost and performance has become a central challenge for AI teams.

The Hidden Costs of Accuracy in Large Contexts

Beyond speed and cost, a larger context window can paradoxically cause a drop in accuracy. Two well-known phenomena are to blame: ‘context rot’ and the ‘lost in the middle’ effect. Models can sometimes lose track of information buried deep within a very long input, much like a person forgetting a detail mentioned at the start of a two-hour meeting.

This challenge is a major hurdle for enterprises. While long-context LLMs promise new efficiencies, they often stumble when a 1 million token limit meets a corporate code repository spanning billions of tokens. The historical progression is clear: GPT-2 started with just 1k tokens, while 2024 models like Gemini 1.5 hit 1M. Yet, in practical applications like Retrieval-Augmented Generation (RAG), recall often degrades past the 8k token mark for many models.

When a model fails to retrieve a critical piece of information from the middle of a long document, the entire output can be rendered useless. This is not just an inconvenience; for applications in healthcare or finance, it is a significant business risk.

This is where the quality of your underlying data becomes non-negotiable. A model trained on meticulously labeled and validated datasets is far more likely to maintain its accuracy even as the context grows. Understanding that data quality is the real competitive edge in AI is the first step toward building systems that are both powerful and dependable. High-quality data acts as a stabilizing force, mitigating the risks of context rot and ensuring your AI's outputs remain trustworthy.

For example, an AI processing lengthy patient histories must accurately recall details about allergies or past conditions, regardless of where they appear in the text. A failure to do so could have severe consequences. This highlights the absolute need for a robust data annotation and quality assurance pipeline to validate that the model is performing as expected across its entire context window.

Impact of Context Window Size on Key Business Metrics

Making smart, informed decisions about your AI architecture requires a clear understanding of these performance trade-offs and their impact on your total cost of ownership. The table below breaks down how different window sizes affect the bottom line.

| Metric | Small Context Window (e.g., 4K tokens) | Medium Context Window (e.g., 32K tokens) | Large Context Window (e.g., 200K+ tokens) |

|---|---|---|---|

| Cost | Low and predictable. Ideal for high-volume, simple tasks. | Moderate. Balances capability with budget for complex summaries or Q&A. | High and potentially volatile. Reserved for specialized, high-value tasks. |

| Latency | Very fast. Suitable for real-time user interactions like chatbots. | Slower. Acceptable for back-end analysis but may lag in direct user-facing roles. | Significant. Often too slow for real-time applications; better for offline batch processing. |

| Task Complexity | Limited to short conversations or single-document analysis. | Can handle multi-document summaries and moderately complex reasoning. | Capable of processing entire codebases, research papers, or lengthy legal contracts. |

As you can see, there is no one-size-fits-all answer. The "right" context window depends entirely on your specific use case, budget, and performance requirements.

Effective Context vs. Advertised Limits

The race for the largest LLM context window has created some truly astonishing numbers, with models now claiming capacities of a million tokens or more. While these figures look fantastic on a spec sheet, they hide a critical truth: there is a huge, often dramatic gap between the advertised limit and a model’s actual, real-world performance.

This is the gap where projects fail. It is where AI quality assurance becomes absolutely non-negotiable.

Put simply, a model’s ability to reliably use information does not just grow bigger and better with its context window. As the input gets longer, models start to struggle. Key details get lost in the noise, reasoning becomes shaky, and the accuracy of the output degrades long before you ever hit that theoretical token limit.

This reality has given rise to the concept of the Maximum Effective Context Window (MECW). This is not the number on the box; it is the practical, battle-tested threshold where a model can consistently retrieve and reason over information with high accuracy. Pushing a model beyond this effective limit is not just a technical problem; it is a business risk that leads to bad outputs and flawed decisions.

The Needle in a Haystack Problem

So, how do we measure this performance drop? Researchers developed a clever benchmark called the "Needle-in-a-Haystack" test. The idea is simple: you embed a specific piece of information (the "needle") at different depths within a massive volume of irrelevant text (the "haystack") and then ask the model to find it.

The results are often shocking, revealing the practical limits of even the world’s best models.

Many models perform well when the critical information is at the beginning or the very end of the context window. But their performance often plummets when that needle is buried somewhere in the middle. This “lost in the middle” problem proves that a model's attention mechanism is not perfect. It can lose focus across vast stretches of text, effectively forgetting critical data points it just read.

For any AI leader, this is a wake-up call. Relying on advertised numbers is like assuming a race car can hold its top speed indefinitely, regardless of road conditions. The reality is far more complicated.

The true measure of an LLM's capability is not its maximum context size, but its ability to maintain high accuracy across that entire window. Without rigorous validation, you are operating on faith, not facts.

Quantifying the Performance Cliff

Recent studies show just how steep this performance cliff can be. Despite those massive advertised context windows, the real-world Maximum Effective Context Window can shrink to just 1-10% of the claimed size. Some models even see a 99% drop in accuracy before hitting their advertised limits.

Alarming research that analyzed hundreds of thousands of data points found that even elite models could fail on certain problems with as few as 100 tokens of context. Similarly, comprehensive tests confirm that performance for leading models like Llama-3.1-405B and GPT-4 starts to degrade after 32k and 64k tokens, respectively. You can dive deeper into these findings by exploring the full research on context window effectiveness.

This paradox, where accuracy falls as context grows, often comes down to issues like attention saturation. The model's attention mechanism gets overwhelmed by the sheer volume of tokens, making it impossible to correctly determine what is important.

This is not a minor bug; it is a fundamental limitation that demands a strategic response. It highlights the non-negotiable need for robust AI quality assurance, where human experts meticulously validate model outputs. That human-in-the-loop process is the only way to ensure your business decisions are based on dependable results, not hidden failures masked by impressive marketing numbers.

Practical Strategies for Managing Long Inputs

Knowing the limits of an LLM context window is one thing, but actually working around them is an entirely different challenge. The good news is that plenty of proven engineering and design strategies can turn these theoretical constraints into practical, high-performing solutions. Moving from theory to action, these techniques are essential for building reliable AI that can handle real-world data without sacrificing accuracy or efficiency.

Each strategy offers a different way to manage long inputs, making sure the model gets exactly the right information at the right time. They are not mutually exclusive, either. Most robust, scalable systems combine several of these techniques to achieve optimal results.

Precision Through Prompt Engineering

The most direct and often simplest strategy is prompt engineering. Think of it as the art and science of writing instructions that guide the model to produce the desired output using as few tokens as possible. Instead of just passing a long, unstructured document to the LLM, a well-designed prompt can ask for specific details, pre-summarize the content, or instruct the model to ignore irrelevant sections entirely.

For example, imagine a legal team reviewing a 50-page contract. They do not need the model to read every boilerplate clause. A sharp, precise prompt can instruct the AI to find and analyze only the sections covering indemnification and liability, which dramatically slashes the token count and boosts both response time and accuracy.

Document Chunking and Retrieval-Augmented Generation (RAG)

When you are dealing with massive knowledge bases that dwarf even the largest context windows, document chunking is your best friend. This technique involves breaking down huge documents, like a complete e-commerce product catalog or a library of medical research, into smaller, meaningful pieces or "chunks."

These chunks are then stored in a specialized database, ready to be retrieved when needed. This is the first step toward a far more powerful strategy: Retrieval-Augmented Generation (RAG). RAG essentially gives the LLM access to external, up-to-date knowledge without trying to cram it all into the prompt.

When a user asks a question, the RAG system first finds the most relevant document chunks and then feeds only that essential information into the LLM's context window along with the original query. This transforms the model from a closed-book exam taker into an open-book researcher.

This method is incredibly effective and scalable. For instance, an e-commerce chatbot can use RAG to pull specific product details from a catalog of thousands of items to answer a customer’s question accurately. It finds the right "chunk" of information instead of trying to read the whole catalog at once. You can learn more about building these systems in our guide to Retrieval-Augmented Generation.

Advanced Techniques for Dynamic Data

Beyond basic chunking, you will need more advanced methods for handling data that is constantly changing or streaming in. These approaches provide sophisticated ways to manage the flow of information into the context window, ensuring the model stays focused on what is most relevant at the moment.

Here are a few key advanced strategies:

- Sliding Window: This is perfect for processing continuous data streams, like a real-time transcript of a meeting or logs from a network security system. The context window "slides" over the data, always keeping the most recent information in focus while dropping the oldest. This gives the model the immediate context it needs for tasks like live summarization or anomaly detection.

- Summarization Chains: For exceptionally long documents, a multi-step summarization process works wonders. First, the document is chunked, and the LLM creates a summary of each chunk. Then, those summaries are combined and summarized again. This pyramid-like process continues until you have a concise final summary of the entire document, distilling pages of text into a manageable input.

- Stateful Memory Patterns: In long, back-and-forth conversations, it is critical for the AI to remember what was said earlier. A stateful memory pattern involves creating a separate, evolving summary of the conversation. This summary, capturing key names, decisions, and user preferences, is fed back into the prompt at each turn, giving the LLM a persistent "memory" without overflowing the context window.

The Critical Role of Data Annotation in a Long Context World

As the LLM context window gets bigger, the demand for precise data annotation and rigorous quality assurance has never been higher. Why? Because longer inputs amplify the risk of subtle errors, biases, and hallucinations that automated checks simply cannot catch. This is where human-in-the-loop validation transitions from a "nice-to-have" to an operational necessity for building AI you can actually depend on.

Without meticulous human oversight, a massive context window can quickly become a liability. Imagine a model that correctly summarizes the first ninety pages of a legal contract but completely misinterprets a critical clause on page ninety-one. Or one that accurately tracks customer sentiment through a long feedback thread before hallucinating a complaint that never even happened.

These are the high-stakes, nuanced failures that only trained human analysts can consistently spot and rectify.

Turning Potential Liabilities into Trusted Assets

Building reliable, scalable AI performance in a long-context world is not just about the model's architecture; it is about the quality of the data that fuels it. High-accuracy data annotation and systematic QA provide the ground truth needed to fine-tune and validate these incredibly powerful models.

This process involves experts who meticulously review and label long-form content to ensure the AI's outputs are factually correct, contextually appropriate, and free from harmful biases. For example, a trained analyst can validate a model's summary of a lengthy medical research paper, ensuring no critical findings were glossed over or distorted.

Investing in human-led data quality assurance is the most direct way to build trust in your long-context AI applications. It transforms the model from a powerful but unpredictable tool into a dependable asset that consistently drives accurate business decisions.

The Foundation of High-Quality AI

Meticulous annotation and validation are fundamental to managing the risks that come with an expanded LLM context window. It ensures the model learns from clean, accurate, and relevant examples, which directly improves its ability to handle complex, long-form information without faltering.

Key areas where expert annotation makes a real, measurable impact include:

- Validating Factual Accuracy: Cross-checking summaries, extractions, and analyses of long documents against the source material to guarantee correctness.

- Detecting Nuanced Errors: Identifying subtle shifts in sentiment, tone, or meaning that automated systems are almost certain to miss within extended conversations or reports.

- Ensuring Guideline Adherence: Enforcing brand voice and safety protocols across lengthy generated content to maintain consistency and quality.

Building a robust AI system starts with clear and comprehensive labeling instructions. To see how structured guidelines create a foundation for accuracy, explore our detailed approach to crafting effective annotation guidelines. This structured approach ensures that human expertise is applied consistently, leading to datasets that produce truly reliable and scalable AI performance.

Frequently Asked Questions About the LLM Context Window

Development teams often encounter the same critical questions when working with an LLM's context window. Getting practical, clear answers is the key to building AI applications that perform well and deliver real business value.

How Do I Choose the Right Context Window?

Picking the right context window size is always a trade-off between capability, cost, and speed. There is no magic number here; the best choice comes down to what your application needs to do.

- For real-time chatbots or simple Q&A: A smaller window (4K to 16K tokens) is usually your best bet. It keeps latency low and operational costs down, which is exactly what you need for a responsive user experience in high-volume situations.

- For complex document analysis or multi-turn conversations: You will need a medium to large window (32K to 200K+ tokens). This gives the model enough room to process a significant amount of information, like summarizing a dense report or remembering the details of a long conversation.

The goal is to provide the model with just enough context to be accurate without paying for unused capacity or making users wait. Running a pilot project is an excellent way to benchmark performance against cost and find the optimal balance for your specific use case.

What Is the Difference Between RAG and a Large Context Window?

While both help an LLM work with information, they do so in completely different ways. Think of a large context window as giving a person an entire book to read right before you ask them a question. All the information is available in their short-term memory at once.

In contrast, Retrieval-Augmented Generation (RAG) is like giving that person a library card instead. The system first finds the single most relevant page or paragraph from the library (your knowledge base) and hands just that piece to the model. This makes RAG incredibly scalable and cost-effective because it keeps the context window focused only on what is essential, neatly avoiding the "lost in the middle" problem.

How Does Data Quality Affect Long-Context Model Performance?

Data quality is everything, especially as the context window gets larger. If you feed a model low-quality data full of noise, inconsistencies, or mistakes, its ability to reason over long inputs will be seriously degraded. It is that simple.

High-quality, carefully annotated data acts as a guide, teaching the model how to identify and prioritize the most important information. For instance, a model trained on clean, well-structured financial reports will be far more reliable at summarizing new ones than a model trained on a messy, unlabeled data dump. This is why expert AI quality assurance is not just a final check; it is a core part of building any long-context AI system you can actually depend on.

At Prudent Partners, we specialize in providing the high-accuracy data annotation and AI quality assurance needed to build dependable, scalable AI solutions. Our expert teams ensure your models perform reliably, turning complex data into trusted business outcomes.

Ready to enhance your AI's accuracy and performance? Connect with us today for a customized solution.