Annotation services turn raw, messy data like images, text, or audio files into structured fuel for AI algorithms. Think of them as the "teacher" for your machine learning model, providing the ground-truth examples it needs to learn and make accurate predictions.

Unlocking AI Potential With Annotation Services

An AI model is like a brilliant student getting ready for a big exam. No matter how smart they are, they cannot pass without textbooks, study guides, and corrected homework. Annotation services provide exactly that for machine learning algorithms. Raw data, like a chaotic library full of unlabeled books, is useless to an AI until the annotation process organizes and explains it.

This transformation is the very heart of supervised learning, where an algorithm learns to map an input to an output by studying thousands of labeled examples. Without this critical step, even the most powerful AI could not tell a car from a pedestrian or spot a cancerous cell in a medical scan. This process ensures your AI models deliver measurable impact and real-world results.

The Foundation of High-Performing Models

The quality of your training data is the single biggest factor determining how well your AI model will perform in the real world. Annotation services are the engine that produces this data, applying human intelligence to tasks that machines still cannot handle reliably.

Here’s where that human touch makes all the difference:

- Providing Context: People understand nuance, cultural context, and ambiguous situations that would completely stump an algorithm. For example, identifying sarcasm in a customer review or recognizing a brand logo that is partially hidden in a photo requires human judgment. This is a practical example of where human-centered annotation provides actionable insights.

- Ensuring Accuracy: Professional annotators work with strict guidelines and multi-layered quality checks to deliver datasets with accuracy rates often hitting 99% or higher. This level of precision is what prevents the classic "garbage in, garbage out" problem, where bad training data creates an unreliable AI.

- Achieving Scale: Labeling a few hundred images might be manageable in-house. But scaling to thousands or even millions of data points? That requires a dedicated workforce and a finely tuned workflow, which is exactly what specialized providers bring to the table. This scalability is a key advantage of partnering with an expert firm.

Fueling a Rapidly Growing Industry

As more industries jump into AI, the demand for high-quality labeled data is exploding. The global data annotation market was valued at USD 2.2 billion and is expected to grow at a compound annual growth rate (CAGR) of 27.4% through 2033. This incredible growth, highlighted by firms like Cognitive Market Research, shows just how essential these services have become.

Ultimately, annotation services bridge the gap between raw information and true machine intelligence. By partnering with experts, you ensure your AI models are built on a solid foundation of accuracy, reliability, and contextual understanding. If you are just starting out, our guide to what data labeling is offers a great place to dig deeper into the fundamentals.

Exploring Core Types of Data Annotation

Data annotation is not a one-size-fits-all process. It is a whole collection of specialized techniques, and the method you choose has to match both your data type and what you want your AI model to accomplish. The first step in picking the right annotation service is understanding which modality fits your project.

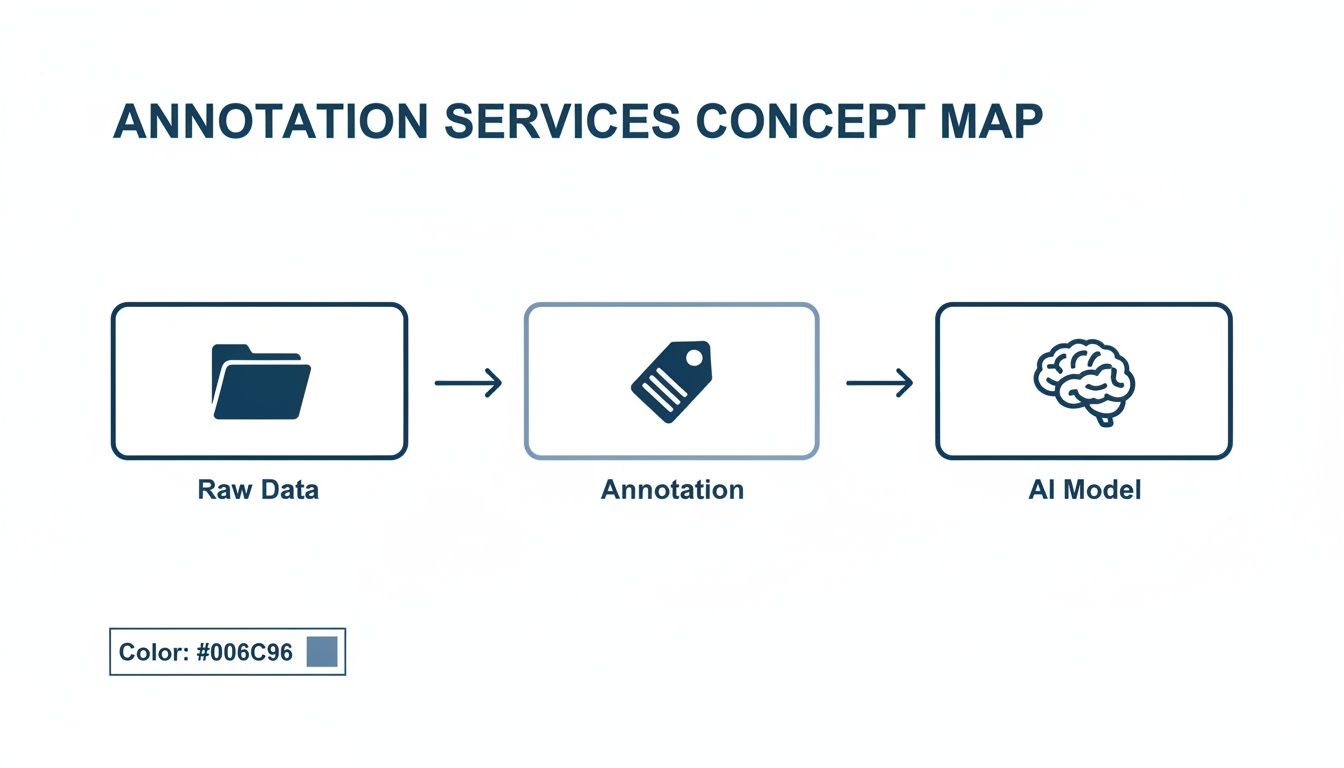

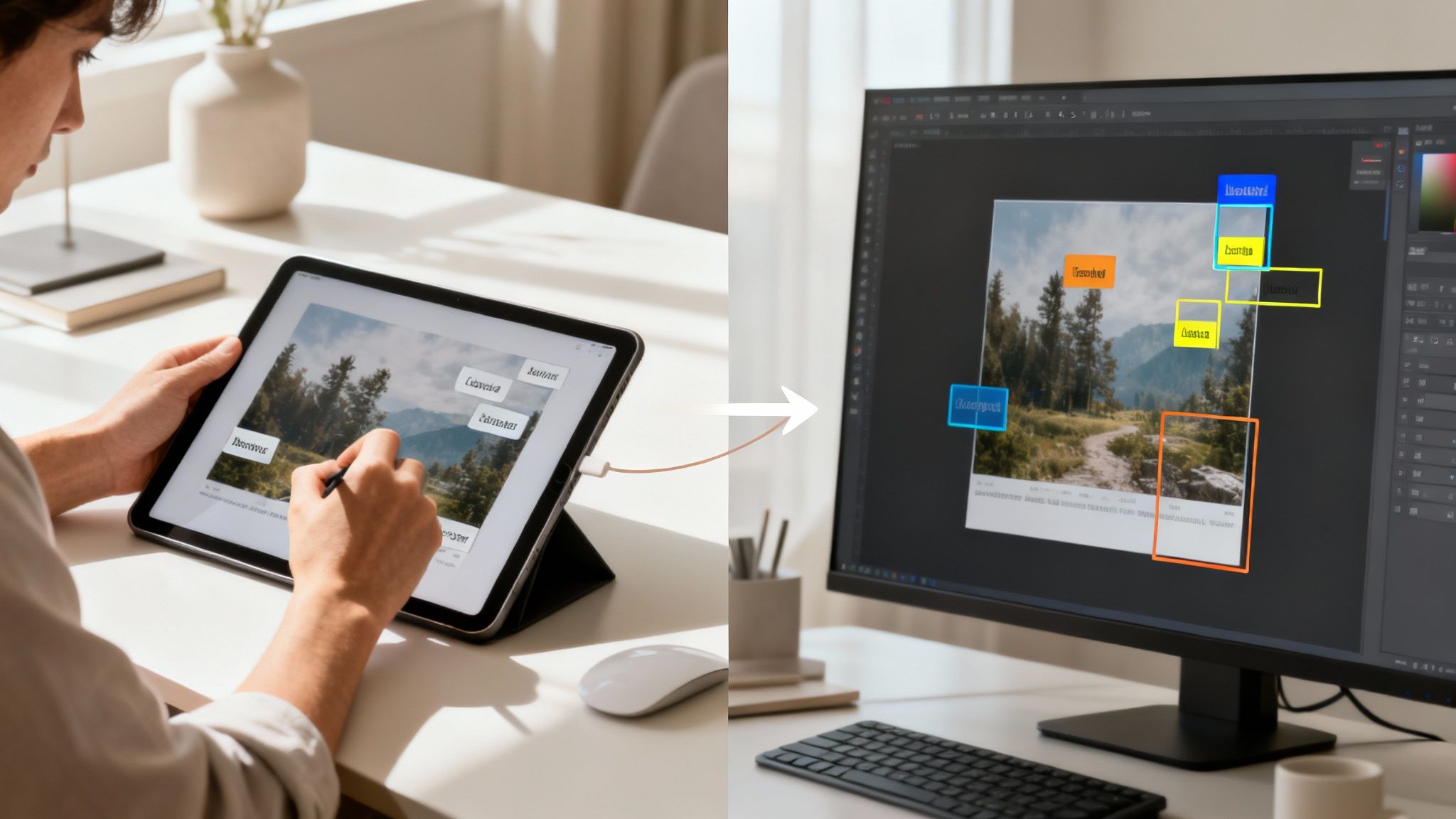

The path from raw, jumbled information to a trained AI model is actually a clear, structured journey. This visual map shows how unstructured data gets transformed through annotation into the high-quality fuel that powers machine learning algorithms.

This workflow drives home a key point: the quality of your annotation is the critical bridge connecting raw data to a functional, accurate AI. Just like a chef needs precisely prepared ingredients, an AI model needs meticulously labeled data to learn anything useful.

Image and Video Annotation

Visual data is one of the most common types needing annotation, fueling countless applications from online shopping to advanced medical diagnostics. The entire goal here is to teach computer vision models to see and interpret the world just like we do.

For an e-commerce site, this could mean using simple bounding boxes to draw rectangles around products in photos, training an AI to automatically categorize new inventory. But in a more complex medical scenario, a technique like semantic segmentation is needed. This involves outlining the exact shape of a tumor in a CT scan, pixel by pixel, which helps AI assist radiologists with early detection. Video annotation simply adds the element of time, tracking objects and actions frame by frame to analyze movement and behavior.
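
To make the difference between the two techniques concrete, here is a minimal Python sketch. The `BoundingBox` schema, the function names, and the toy mask are illustrative assumptions, not the format of any particular annotation tool. It shows that a bounding box is just four coordinates, while a segmentation mask records a decision for every pixel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned rectangle in pixel coordinates (hypothetical schema)."""
    label: str
    x_min: int
    y_min: int
    x_max: int  # exclusive
    y_max: int  # exclusive

    def area(self) -> int:
        return max(0, self.x_max - self.x_min) * max(0, self.y_max - self.y_min)


def mask_area(mask):
    """Count the pixels marked 1 in a binary segmentation mask."""
    return sum(sum(row) for row in mask)


def mask_to_box(label, mask):
    """Return the tightest bounding box that encloses all foreground pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, value in enumerate(row) if value]
    if not rows:
        raise ValueError("mask has no foreground pixels")
    return BoundingBox(label, min(cols), min(rows), max(cols) + 1, max(rows) + 1)


# A toy 4x5 mask: 1 marks pixels that belong to the labeled region.
tumor_mask = [
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

box = mask_to_box("tumor", tumor_mask)
print(box.area(), mask_area(tumor_mask))
```

The box covers more pixels than the mask because a rectangle cannot follow an irregular outline, which is why shape-sensitive tasks tend to call for segmentation rather than boxes.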

Text Annotation

Unstructured text like documents, reviews, and reports holds a ton of value, but AI needs a helping hand to grasp its meaning and context. Text annotation services add that crucial layer of interpretation.

A classic example is Named Entity Recognition (NER). Law firms use NER to train models that can scan thousands of contract pages in minutes to find and pull out key info like names, dates, and organizations. The time saved is enormous. Another powerful technique is sentiment analysis, where customer reviews are tagged as positive, negative, or neutral. This gives businesses a real-time pulse on public perception, letting them respond to feedback almost instantly. You can dive deeper into the various types of annotation in our detailed guide.
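
As a rough illustration of what NER annotations can look like as data, the sketch below stores each entity as a character span with a label and runs a basic sanity check. The `EntitySpan` schema, the helper names, the label set, and the sample sentence are all hypothetical; real tools use their own formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpan:
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str


def span_for(text, phrase, label):
    """Build a span for the first occurrence of phrase in text."""
    start = text.index(phrase)
    return EntitySpan(start, start + len(phrase), label)


def validate_spans(text, spans):
    """Return a list of problems: out-of-range or overlapping spans."""
    problems = []
    ordered = sorted(spans, key=lambda s: (s.start, s.end))
    for span in ordered:
        if not 0 <= span.start < span.end <= len(text):
            problems.append(f"out of range: {span.label}")
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.start < prev.end:
            problems.append(f"overlap: {prev.label} / {cur.label}")
    return problems


text = "Acme Corp signed the lease with Jane Doe on 3 May 2021."
spans = [
    span_for(text, "Acme Corp", "ORG"),
    span_for(text, "Jane Doe", "PERSON"),
    span_for(text, "3 May 2021", "DATE"),
]

for span in spans:
    print(span.label, repr(text[span.start:span.end]))
print(validate_spans(text, spans))
```

Checks like `validate_spans` are cheap to run on every submitted document and catch mechanical mistakes before a human reviewer spends time on them.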

Annotation is a challenging, complicated, and iterative process. You need someone who understands the data and who will dare to contradict if things do not make sense. This ensures the ground truth reflects reality, not just a superficial label.

Audio Annotation

From the voice assistant in your kitchen to sophisticated security systems, audio annotation is what trains models to understand spoken language and recognize specific sounds. And it is about a lot more than just simple transcription.

For virtual assistants like Alexa or Siri, annotators perform audio transcription to turn speech into text. But they also add important metadata, like identifying who is speaking, noting pauses, or tagging background noises. For a security application, a model might be trained to recognize the distinct sound of breaking glass. Annotators would label audio clips with this sound, carefully distinguishing it from other noises to build a reliable system that does not trigger false alarms.

LiDAR and 3D Point Cloud Annotation

This is a highly specialized field, but it is the absolute cornerstone of autonomous vehicle technology and modern geospatial analysis. LiDAR sensors create incredibly detailed 3D maps of an environment by bouncing lasers off objects, which generates a "point cloud" made of millions of data points.

Annotating this data is a complex, three-dimensional puzzle. Experts use specialized tools to draw 3D cuboids around cars, pedestrians, and cyclists right within the point cloud. This is how a self-driving car’s perception system learns to recognize and track every single object in its surroundings with pinpoint accuracy. This foundational work is what allows the vehicle to safely navigate complex, ever-changing traffic, making it one of the most critical applications of data annotation today.

To help you connect these concepts to your own needs, here is a quick summary of the different annotation types and where they are most commonly used.

Comparing Data Annotation Types and Use Cases

This table breaks down each data annotation modality, showing the common techniques involved and the industries that rely on them. It is a great starting point for identifying which service aligns with your project goals.

| Annotation Type | Common Techniques | Primary Industry Use Cases |
|---|---|---|
| Image & Video Annotation | Bounding Boxes, Polygons, Semantic Segmentation, Keypoint Annotation | E-commerce, Healthcare (Medical Imaging), Autonomous Vehicles, Security & Surveillance, Agriculture |
| Text Annotation | Named Entity Recognition (NER), Sentiment Analysis, Text Classification | Finance (Fraud Detection), Legal (Contract Analysis), Customer Service (Chatbots), Healthcare (EHR Analysis) |
| Audio Annotation | Transcription, Speaker Diarization, Sound Classification | Voice Assistants, Call Centers (Quality Assurance), Security Systems (Sound Detection), Automotive (In-Car Voice Control) |
| LiDAR & 3D Point Cloud | 3D Cuboids, Point Cloud Segmentation, Sensor Fusion | Autonomous Vehicles, Robotics, Geospatial Mapping (GIS), Urban Planning, Augmented Reality (AR) |

Ultimately, understanding these core annotation types is the first step toward building a successful AI model. Each one provides a different lens through which your machine can learn to interpret the world, making the right choice absolutely essential for getting the results you need.

The Role of Quality Assurance in Data Annotation

Just labeling your data is not enough. Not even close.

What truly separates a powerful, trustworthy AI model from an expensive failure is the quality of those labels. Quality Assurance (QA) is the backbone of the entire data annotation process, acting as your safeguard against the classic "garbage in, garbage out" syndrome that dooms so many machine learning projects.

Without a solid QA framework, even tiny inconsistencies start to multiply, teaching your AI the wrong patterns. The result? Poor performance, biased outcomes, and zero confidence in the model's predictions. That is why professional annotation services for machine learning bake multi-layered QA directly into their workflows from day one.

Core QA Methodologies for High Accuracy

To hit the kind of precision needed for production-grade AI, top providers lean on proven methodologies designed to catch and correct human error before it pollutes your dataset. Think of them as different layers of validation, often used together to build a comprehensive quality control system.

Two of the most effective models are:

- Consensus Model: The same piece of data gets labeled independently by several different annotators. If they all agree, it is a pass. If there is a disagreement, the data is kicked up to a senior expert for a final verdict. This is a fantastic way to catch subjective interpretation issues and build a strong, consistent accuracy baseline across the team.

- Review-Based Model: Here, a senior annotator or a dedicated QA specialist audits a sample of labeled data from each team member. This expert review spots systemic problems, provides targeted feedback to individuals, and makes sure everyone is following the annotation guidelines to the letter. It is the final gatekeeper ensuring the dataset hits the required quality bar.

These structured processes are fundamental to delivering datasets that achieve 99% accuracy or higher, the non-negotiable standard for high-stakes applications like medical diagnostics or autonomous driving.
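
The consensus model described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the function name, the item IDs, and the unanimous-agreement rule are chosen to mirror the description here, and real pipelines often use agreement thresholds or weighted votes instead.

```python
def resolve_by_consensus(labels_by_item):
    """Accept an item's label only when every annotator agrees.

    Returns (accepted, escalated): accepted maps item IDs to the agreed
    label, and escalated lists item IDs that need a senior reviewer.
    """
    accepted = {}
    escalated = []
    for item_id, labels in labels_by_item.items():
        if labels and len(set(labels)) == 1:
            accepted[item_id] = labels[0]
        else:
            escalated.append(item_id)
    return accepted, escalated


# Hypothetical votes from three independent annotators per item.
votes = {
    "review_001": ["positive", "positive", "positive"],
    "review_002": ["negative", "neutral", "negative"],
}

accepted, escalated = resolve_by_consensus(votes)
print(accepted)
print(escalated)
```

The escalated list is the work queue for the senior expert, so its size over time is also a useful signal of how ambiguous the guidelines are.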

Building a Culture of Quality

Beyond specific models, a winning QA strategy is about creating a complete ecosystem focused on continuous improvement. It all starts with crystal-clear, detailed annotation guidelines before the project even kicks off. From there, it is about ongoing training for annotators and using performance tracking tools to monitor key metrics.

At Prudent Partners, we use our proprietary Prudent Prism platform to give clients a transparent window into:

- Individual Annotator Accuracy: We track performance to see who is knocking it out of the park and who might need a little extra coaching.

- Turnaround Times: This ensures we are hitting project milestones without ever cutting corners on quality.

- Error Categorization: We analyze the types of mistakes being made so we can refine guidelines and stop them from happening again.

This data-driven approach allows for proactive quality management, not reactive fixes. By investing in a rigorous, multi-layered QA process, you ensure your final training dataset is a true, accurate reflection of the real world. That directly translates into more reliable and powerful AI models.

To see how your current data quality stacks up, consider a professional data annotation assessment to find potential gaps and opportunities for improvement. The insights you gain can be a game-changer for refining your AI strategy and making sure your models perform exactly as you expect.
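
For a sense of how metrics like per-annotator accuracy and error categorization can be derived from QA audit results, here is a minimal Python sketch. It is a generic illustration only: the record layout, annotator IDs, and error categories are invented for the example, and it does not represent how the Prudent Prism platform works.

```python
from collections import Counter

# Hypothetical audit results: (annotator, was_correct, error_type).
audit_records = [
    ("ann_a", True, None),
    ("ann_a", True, None),
    ("ann_a", True, None),
    ("ann_a", False, "loose_box"),
    ("ann_b", True, None),
    ("ann_b", True, None),
    ("ann_b", False, "loose_box"),
    ("ann_b", False, "wrong_class"),
]


def summarize(records):
    """Return (accuracy per annotator, error counts per category)."""
    totals = Counter()
    correct = Counter()
    errors = Counter()
    for annotator, was_correct, error_type in records:
        totals[annotator] += 1
        if was_correct:
            correct[annotator] += 1
        else:
            errors[error_type] += 1
    accuracy = {name: correct[name] / totals[name] for name in totals}
    return accuracy, errors


accuracy, errors = summarize(audit_records)
print(accuracy)
print(errors.most_common())
```

In this toy data, the most frequent error category points to a guideline that may need tightening, while the per-annotator figures show who might benefit from extra coaching.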

Choosing Between Manual and Automated Annotation

When you get down to it, deciding how to label your data is one of the most important calls you will make. This choice has a direct impact on your project's timeline, budget, and, most importantly, the final accuracy of your AI model.

The debate between manual and automated annotation is not about which is flat-out better. It is about what is right for your specific needs: finding that sweet spot between accuracy, scale, and the sheer complexity of your data.

The Unmatched Precision of Manual Annotation

There are times when only a human eye will do. When your model needs to grasp nuance, ambiguity, or incredibly fine details, human intelligence is non-negotiable. Manual annotation puts your data in the hands of trained professionals who meticulously label everything according to strict guidelines, bringing a level of judgment that algorithms just cannot match yet.

This human-led approach is absolutely critical for tasks like:

- Medical Image Analysis: An expert radiologist needs to identify a tiny, subtle abnormality on an MRI. That is not a job for an algorithm alone.

- Sentiment Analysis: How do you teach a machine to detect sarcasm or irony in a customer review? You need a human who understands cultural context.

- Complex Object Recognition: Think of labeling a partially hidden pedestrian on a crowded city street for an autonomous vehicle's training data. A person can infer shapes and relationships in a way that code struggles with.

The market backs this up. In the U.S. AI annotation market, manual annotation holds a commanding 55% market share. It is the go-to for its superior accuracy, especially in complex fields like autonomous vehicles (which claim a 28% application share), medical imaging, and retail. You can discover more about these industry trends and the future of AI annotation.

The Power of Automation and AI Assistance

On the other end of the spectrum, what if you have a massive dataset with fairly simple labeling rules? This is where automated annotation tools shine. These systems use pre-trained models to do a first pass on the data, which can slash your labeling time from weeks to days.

This approach is perfect when speed and volume are your main concerns. Picture an e-commerce company that needs to tag millions of product photos with basic labels like "shirt" or "shoes." An automated tool can chew through that work in a tiny fraction of the time it would take a human team.

Finding the Right Balance with a Hybrid Approach

For many projects, the most effective strategy is not choosing one or the other; it is a hybrid model that blends the best of both. Often called "human-in-the-loop" annotation, this method uses automation for the heavy lifting and human experts for the judgment calls.

Here is how it works: an automated system does the initial labeling. Then, human experts step in to review, correct, and validate what the machine did. This two-stage process catches the algorithm's errors and fine-tunes the labels, giving you a final dataset that is both highly accurate and produced efficiently.
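
The two stages can be sketched as follows. This is one possible variant, not a description of any specific provider's pipeline: the `PreLabel` record, the model confidence score, and the 0.9 threshold used to prioritize reviewer attention are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreLabel:
    item_id: str
    label: str
    confidence: float  # hypothetical model score between 0 and 1


def route(prelabels, threshold=0.9):
    """Split machine labels into a priority review queue and a spot-check pool."""
    needs_review = [p for p in prelabels if p.confidence < threshold]
    spot_check = [p for p in prelabels if p.confidence >= threshold]
    return needs_review, spot_check


def apply_corrections(prelabels, corrections):
    """Final labels: the reviewer's correction wins over the machine label."""
    return {p.item_id: corrections.get(p.item_id, p.label) for p in prelabels}


# Stage 1: an automated first pass (values invented for the example).
prelabels = [
    PreLabel("img_001", "shirt", 0.98),
    PreLabel("img_002", "shoes", 0.55),
    PreLabel("img_003", "shirt", 0.72),
]

# Stage 2: reviewers look at low-confidence items first and fix mistakes.
needs_review, spot_check = route(prelabels)
corrections = {"img_003": "jacket"}  # hypothetical reviewer decision
final_labels = apply_corrections(prelabels, corrections)

print([p.item_id for p in needs_review])
print(final_labels)
```

Where the threshold sits is a trade-off: a higher value sends more items to people and costs more, while a lower value relies more heavily on spot checks to catch confident mistakes.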

This blended approach gives you the scale of automation with the quality guarantee of human oversight, making it a powerful choice for many modern annotation services for machine learning.

How to Select the Right Annotation Service Provider

Choosing a partner for your annotation needs is a massive strategic decision. It goes far beyond just comparing price tags. You are picking the team that will build the very foundation your AI model learns from, so you need a solid framework to evaluate them properly.

This decision is only getting more critical. The global AI annotation market is set to explode from USD 1.96 billion to a staggering USD 17.37 billion by 2034. And even with all the hype around automation, manual annotation is still expected to hold a 41.30% market share because nothing beats it for reliability. With North America leading the charge at 33.2% of the market, new vendors are popping up everywhere, making a careful, deliberate choice more important than ever. You can dig deeper into the trends fueling this growth in this in-depth market analysis.

Evaluate Expertise and Specialization

Let us be clear: not all annotation services are the same. A provider who is brilliant at annotating financial documents for text analysis might be completely out of their depth with LiDAR data for an autonomous vehicle. Your first job is to confirm they have real, proven experience in your specific domain and with your kind of data.

When you are talking to a potential partner, ask for case studies or work samples directly related to your industry. For example, if you are in healthcare, ask about their experience with DICOM image annotation. Do they understand medical terminology? This is how you find out if they truly get the nuances of high-stakes applications.

Scrutinize Quality Assurance and Accuracy Guarantees

A provider's commitment to quality is completely non-negotiable. Do not settle for vague promises of "high quality." You need to see a transparent, robust Quality Assurance (QA) process with contractually guaranteed accuracy levels, ideally 99% or higher. Any partner worth their salt will be happy to walk you through their QA methodologies.

A strong QA framework should always include:

- Multi-Layered Reviews: This is key. Look for a mix of consensus models (where multiple annotators label the same data to check for agreement) and expert reviews to catch and fix any errors.

- Transparent Performance Metrics: You should have access to real-time data on annotator accuracy, consistency, and throughput. It is a sign they believe in accountability.

- Clear Feedback Loops: There must be a structured process for you to flag edge cases and for them to refine the annotation guidelines. It is a partnership, not a black box.

Confirm Security and Compliance Certifications

Your data is a hugely valuable and often sensitive asset. Handing it over to a third party requires absolute confidence in their security. The provider must show you they have ironclad data protection measures to safeguard your IP and comply with all relevant regulations.

Look for internationally recognized certifications as proof they take this seriously:

- ISO/IEC 27001: This is the internationally recognized standard for information security management systems. Certification shows that the provider maintains audited controls over how your data is handled from start to finish.

- GDPR/HIPAA Compliance: If you are dealing with personal or medical data, this is a must-have. They need to prove they adhere to these critical privacy laws.

- Secure Infrastructure: Ask the tough questions. Are they using secure servers? Encrypted data transfer protocols? What are their access controls for staff?

You have to see this as choosing a long-term partner, not just hiring a vendor. You need a team that acts as a true extension of your own, one that is fully invested in your project's success and flexible enough to adapt as your needs change.

Assess Scalability and Communication

Your data needs will grow. It is almost a guarantee. The provider you choose must have the capacity to scale their team and infrastructure to meet your demands without letting quality slip. As you evaluate them, talk specifics about how they would ramp up for larger volumes or tighter deadlines.

Just as important is communication. Clear, consistent communication is the hallmark of a professional operation. Look for a dedicated project manager, regular progress reports, and a support system that is actually responsive. And before you sign anything, insist on a pilot project. This trial run is the single best way to test their quality, communication, and workflow in a real-world setting. It will tell you everything you need to know about whether they are the right fit for the long haul.

Time to Partner for Annotation Success

Building trustworthy AI starts with one thing: exceptionally high-quality training data. Throughout this guide, we have covered the entire annotation playbook, from picking the right techniques to building airtight quality assurance. Now, it is time to put that knowledge into action.

Prudent Partners delivers the precision, security, and scale your AI initiatives need to succeed. Our human-in-the-loop approach ensures the nuanced, contextual understanding required for complex models is baked into every single label. We do not just work for you; we become a seamless extension of your team, dedicated to building the ground truth your algorithms demand.

Your Strategic Data Partner

Choosing an annotation provider is not just a transaction; it is a strategic decision that will define the future of your AI capabilities. We focus on building a true partnership, offering far more than just labeling services. We provide the strategic guidance to make sure your data pipeline is efficient, accurate, and ready to grow with you.

Our commitment to 99%+ accuracy is not just a goal; it is a guarantee backed by proven methodologies and a multi-layered QA process. That level of precision is non-negotiable for models that have to perform in the real world, whether they are powering medical diagnostics or autonomous navigation. To see how we make this happen, take a look at our deep dive on AI quality assurance.

Security and Transparency You Can Count On

We get it: your data is one of your most valuable assets. That is why we operate under strict quality and security protocols, backed by our ISO 9001 (quality management) and ISO/IEC 27001 (information security management) certifications. These are not just badges on a website; they are your assurance that your sensitive information is handled with the highest standards of confidentiality and integrity.

Transparency is at the heart of how we operate. Our proprietary Prudent Prism platform gives you a clear, real-time window into project performance. You can track everything that matters (annotator accuracy, throughput, and turnaround times) whenever you want.

This complete visibility gives you total confidence in the annotation process, from start to finish.

With deep expertise across every major data type, including image, video, LiDAR, text, and audio, we are equipped to handle even the most diverse and complex projects. We give AI and ML teams the power to build models that deliver measurable impact and real-world results. Let us talk about your project and design a custom data annotation solution to get you to production faster.

Ready to build your AI on a foundation of absolute quality? Contact Prudent Partners today for a custom consultation and pilot project.

Common Questions About Data Annotation

Diving into data annotation can bring up a lot of practical questions. To help you navigate the landscape and make the right calls, here are some straightforward answers to the questions we hear most often.

What’s the Difference Between Data Annotation and Data Labeling?

You will often hear these terms used interchangeably, but there is a small yet important difference.

Data labeling is the simple act of applying a single tag to a piece of data. Think of it as putting a "cat" or "dog" label on an image. It is a basic classification.

Data annotation, on the other hand, is a much broader term. It includes labeling but goes further by adding much more context and detail. This could mean drawing a precise polygon around a tumor in a CT scan or tracking a specific vehicle’s path frame by frame in a video.

In short, all annotation involves some form of labeling, but not all labeling is as detailed as true annotation.

How Is the Cost of Annotation Services Determined?

There is no one-size-fits-all price tag for an annotation project. The cost really comes down to a few key factors, because every project has its own unique demands that influence the time and expertise needed.

Here are the main cost drivers:

- Data Complexity: There is a world of difference between drawing simple bounding boxes on product photos and annotating a 3D LiDAR point cloud for a self-driving car. The more complex the data, the more intensive the work.

- Required Accuracy: Hitting accuracy targets of 99% or higher is not magic; it requires multiple layers of quality assurance. This adds to the cost but is absolutely critical for building a reliable AI model.

- Tooling and Infrastructure: The project might demand specialized annotation software or a highly secure, compliant environment (like one that meets HIPAA standards), which can also affect the final price.

- Volume of Data: It is a game of scale. While bigger projects have a higher total cost, the price per annotation usually drops as volume increases, thanks to the efficiencies we can build into the workflow.

How Do You Ensure Data Security and Confidentiality?

Protecting your data is not just a feature; it is the foundation of everything we do. Any serious annotation partner should have a rock-solid security framework, and that starts with globally recognized certifications.

At Prudent Partners, we are ISO/IEC 27001 certified, the gold standard for information security management. This certification is our promise to you that your data is handled with strict access controls, end-to-end encryption, and comprehensive security protocols from start to finish.

On top of that, we always operate under strict Non-Disclosure Agreements (NDAs). For projects with sensitive data, like in healthcare or finance, we are fully equipped to meet specific compliance needs like GDPR or HIPAA.

Can You Handle Large-Scale, Ongoing Annotation Projects?

Absolutely. Scalability is what separates a professional annotation provider from the rest. We are set up to manage high-volume, continuous annotation pipelines that keep your model development and retraining efforts moving forward without a hitch.

Our project managers will work with you to design a flexible workflow that can easily scale up or down based on your needs. This ensures you get a steady stream of high-quality data, delivered on time, without ever sacrificing accuracy.
