Annotating an article is the process of turning a wall of text into structured, intelligent data that a machine learning model can learn from. By tagging key elements like people, places, organizations, and even the sentiment behind a statement, you are teaching an AI how to read and interpret context. It is the first and most critical step in building any powerful natural language processing (NLP) application.

The Power of Precise Article Annotation for AI

High-quality annotated articles are the bedrock of sophisticated AI. They power everything from intelligent chatbots that understand user intent to advanced market intelligence tools that can predict stock movements. This process is more than data entry; it is about crafting the foundational logic that allows an algorithm to make sense of messy, unstructured human language.

When an article is properly annotated, it becomes an invaluable training asset. The precision of your labels directly impacts the model's accuracy, reliability, and ultimately, its usefulness in the real world. This is where the true value of what is data labeling comes into focus. Each tag, category, and relationship you define gives the algorithm the context it needs to function.

Without this human-guided structure, a machine learning model sees a news report or a customer review as nothing more than a random jumble of words.

Unlocking Business Intelligence

Consider how a carefully annotated news article could train a financial AI. By tagging company names, executive titles, and the positive or negative tone of any forward-looking statements, the model learns to connect specific events to market reactions. This conversion of qualitative text into quantitative, actionable data allows organizations to automate complex analytical tasks and uncover predictive insights with measurable impact. Similarly, meticulously labeled product reviews are the fuel for e-commerce recommendation engines that provide relevant and personalized suggestions.

Building Reliable AI Solutions

Ultimately, article annotation is the primary step in building dependable and scalable AI. It ensures the data fueling your algorithms is clean, consistent, and perfectly aligned with your business goals. The entire process, from defining your annotation schema to quality-checking the final dataset, directly contributes to how well your AI system will perform, providing a clear return on investment through improved accuracy and efficiency.

Building Your Annotation Project Blueprint

Every successful AI project starts long before the first label is ever applied. It begins with a rock-solid plan, a blueprint that guides every decision. Without one, you risk inconsistent data, expensive rework, and a model that fails to deliver. This plan acts as your project's North Star, ensuring alignment and clarity from start to finish.

First, you must define your goal with absolute clarity. Are you training a model to pull specific drug names and patient outcomes from dense medical journals? Or are you trying to spot company names and track sentiment in financial news? The more specific your goal, the easier it is to design an annotation schema that works and delivers measurable results.

Getting this initial planning phase right is the key to achieving the accuracy levels that drive real business impact.

Defining Your Annotation Schema

An annotation schema is the rulebook for your project. It is the set of labels and definitions your team will use to tag text, and it needs to be the single source of truth for everyone involved. A good schema is incredibly detailed, packed with clear definitions and, most importantly, numerous examples for each entity type.

For instance, a healthcare project analyzing clinical trial data might use entities like these:

- DRUG_NAME: The specific name of a medication being studied (e.g., "Ibuprofen").

- MEDICAL_CONDITION: Any disease or symptom mentioned (e.g., "chronic inflammation").

- PATIENT_OUTCOME: The result of the treatment, categorized as positive, negative, or neutral (e.g., "reduced symptoms").

- DOSAGE: Specific medication quantities and frequencies (e.g., "200mg twice daily").

Creating robust documentation from the beginning eliminates ambiguity down the road. For a deeper dive, review our best practices for creating clear annotation guidelines.

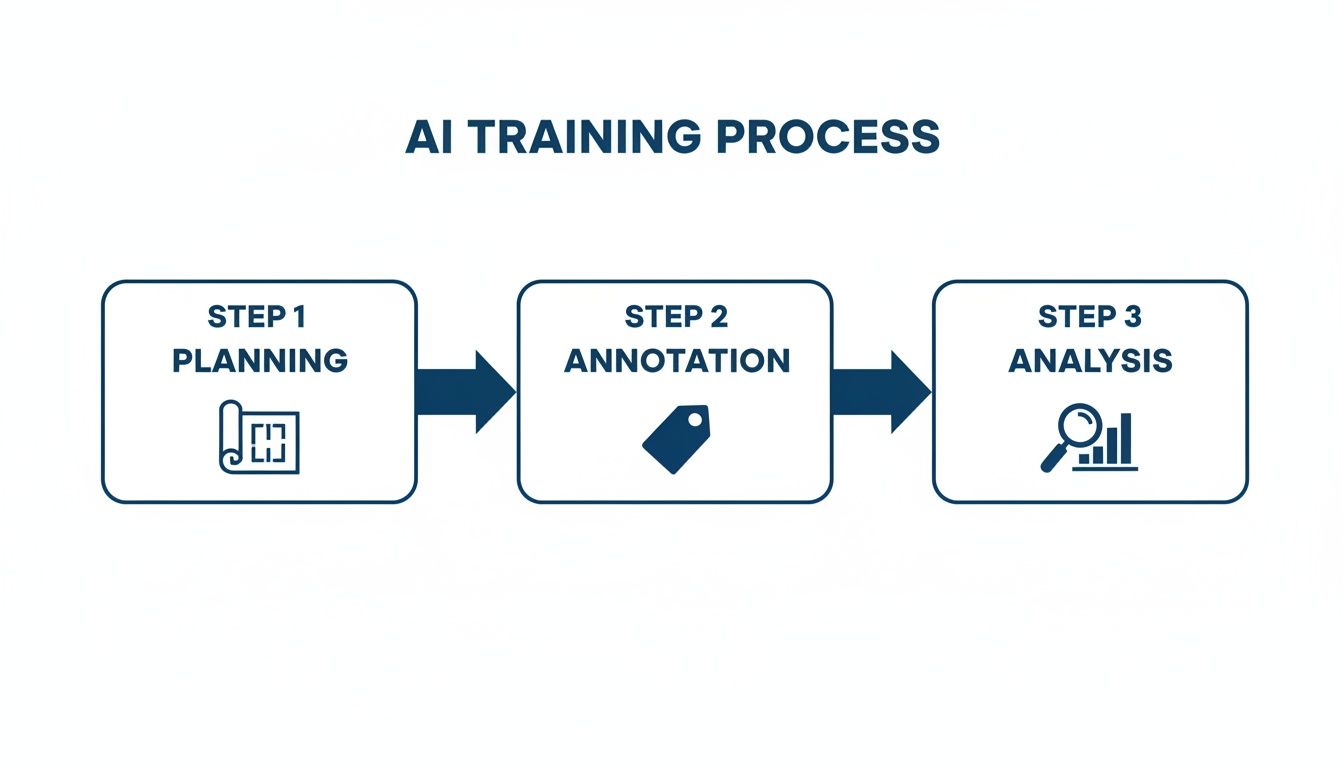

The entire process flows logically from planning through to the final analysis.

This demonstrates that planning is not a one-off task; it is the foundation for everything that follows. Get this right, and both your annotation and analysis will be built on solid ground.

Aligning Labels with AI Goals

The labels you choose are directly tied to what your AI model will ultimately be able to do. If you are building a system to monitor adverse drug reactions, you absolutely need labels for symptoms and side effects. If you are building a model for finance, you will need labels for things like MONEY, ORGANIZATION, and TICKER_SYMBOL.

This alignment is more important than ever. The data annotation tools market was valued at USD 2.32 billion in 2025 and is expected to rocket to USD 9.78 billion by 2030, driven by the explosion in AI adoption. That growth means the demand for precise, well-planned annotation projects is only increasing.

A well-designed project blueprint does more than guide your annotators; it future-proofs your AI investment. By anticipating the nuances your model will need to understand, you build a dataset that is not only accurate but also rich with actionable intelligence.

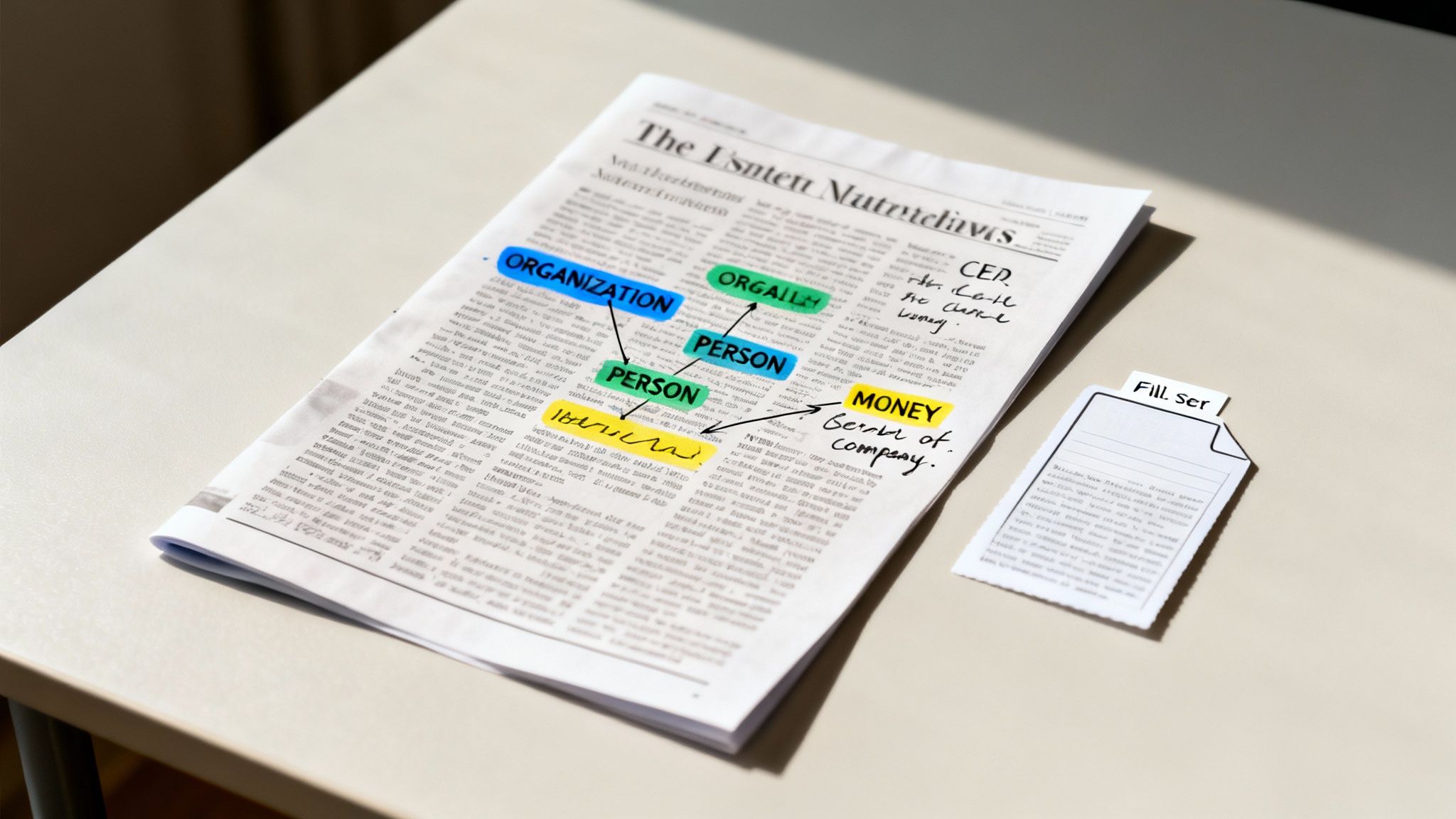

A Hands-On Walkthrough of an Annotated Article

Theory is great, but there is no substitute for seeing how it works in the real world. Let’s shift from planning to practice. By walking through a concrete sample annotation of an article, you can see exactly how abstract rules and labels become structured, machine-readable data.

We will break down a short, fictional news snippet about a corporate merger, applying a simple annotation schema to tag key entities. This is the core process that turns a block of unstructured text into a valuable dataset for training an AI model.

This hands-on example cuts through the jargon and shows the level of precision needed to create high-quality data for Natural Language Processing (NLP).

The Annotation Schema Legend

Before we begin, every annotation project needs a clear set of rules—the schema. Think of it as a legend for a map. It ensures everyone on the team makes the same decision when faced with the same type of data, which is absolutely critical for consistency.

Here is the schema for our example, which defines the labels and their specific meanings.

Annotation Schema for the Sample Article

| Entity Label | Color Code | Definition and Example |

|---|---|---|

| ORGANIZATION | Blue | Names of companies, agencies, or institutions. Example: "Innovate Inc." |

| PERSON | Green | Names of individuals. Example: "Jane Doe" |

| MONEY | Yellow | Monetary values, including currency symbols. Example: "$1.2 billion" |

| DATE | Orange | Specific dates, months, or years. Example: "October 26, 2024" |

With this framework in place, we are ready to start tagging.

Inline Annotated Sample Article

Now, let's apply our schema to the sample text. Each colored highlight directly corresponds to an entity label from our table.

Original Article Text:

“San Francisco, CA – Tech giant Innovate Inc. announced today, October 26, 2024, its definitive agreement to acquire competitor Future Systems for $1.2 billion in cash. The deal, expected to close in the first quarter of next year, was confirmed by Innovate Inc.’s CEO, Maria Zhang. Analysts believe this move will reshape the AI landscape. David Chen, a lead analyst at Market Insights Group, called the acquisition a 'bold strategy' to consolidate market share before the end of the fiscal year.”

And here is the fully annotated version:

San Francisco, CA – Tech giant Innovate Inc. announced today, October 26, 2024, its definitive agreement to acquire competitor Future Systems for $1.2 billion in cash. The deal, expected to close in the first quarter of next year, was confirmed by Innovate Inc.’s CEO, Maria Zhang. Analysts believe this move will reshape the AI landscape. David Chen, a lead analyst at Market Insights Group, called the acquisition a 'bold strategy' to consolidate market share before the end of the fiscal year.

The Rationale Behind Each Annotation

Good annotation is not just about highlighting words; it is about understanding the why behind each tag. This is what separates clean, reliable data from noisy, unusable data.

- ORGANIZATION: We tagged Innovate Inc., Future Systems, and Market Insights Group. Each is a formal corporate or group entity, fitting our definition perfectly.

- PERSON: Maria Zhang and David Chen are clearly individuals. Notice that we did not include their titles ("CEO" or "lead analyst") in the tag itself. Our schema only asks for the name.

- MONEY: The $1.2 billion figure is a straightforward monetary value.

- DATE: Similarly, October 26, 2024, is a specific calendar date that matches our rule.

This inline example gives you a clear visual, but what does the machine actually see?

Exploring the Annotation Data Structure

The highlighted text is great for human review, but an AI model needs something much more structured. The final output is usually a file format like JSON or CSV. JSON is especially popular because it can neatly capture the text, the entity labels, and their exact start and end positions (character indices).

To see what this looks like under the hood, you can [download the sample JSON annotation file here]. This file is what a machine learning engineer would actually feed into a model.

A professional data annotation service will always deliver data in a clean, structured format like this, ready for training without needing extensive prep work from your engineering team.

Ensuring Data Accuracy with a Multi-Layer QA Process

A beautifully annotated article is worthless if the labels are inconsistent or incorrect. Building AI systems you can trust depends entirely on the quality of your training data, which is why a robust, multi-layer quality assurance (QA) process is non-negotiable. This goes beyond a simple spell-check; it is about building a human-centric system of checks and balances that guarantees your dataset is solid.

Effective QA must begin right after the first round of annotation. A single pass is never enough. Human language is full of nuance and ambiguity, and a multi-stage approach ensures multiple sets of eyes review the data. Each layer is designed to catch potential issues the previous one might have missed.

The Core Components of Effective QA

A reliable QA framework integrates several critical stages, each validating the data from a different angle. This layered defense against errors is what separates a mediocre dataset from one that powers exceptional AI.

Our approach typically includes:

- Peer Review Cycles: Annotators review each other’s work against the established guidelines. This first pass is excellent for catching straightforward mistakes and ensuring everyone is adhering to the project schema.

- Consensus Scoring: Two or more annotators label the same article independently. An automated tool then compares their work to calculate an agreement score. Any disagreements get flagged for a manual review, a fantastic way to pinpoint ambiguous spots in the guidelines.

- SME Validation: For specialized content, a Subject Matter Expert (SME) provides the final sign-off. For instance, a medical professional would validate annotations on a clinical trial article to ensure technical accuracy that a general annotator might not catch.

This commitment to quality is crucial. The global AI annotation market is projected to skyrocket from USD 2.3 billion to USD 28.5 billion by 2034, driven by tools that blend human expertise with automation. You can find more insights on this explosive growth at market.us.

Handling Ambiguity and Edge Cases

No set of guidelines, no matter how detailed, can predict every possible scenario. Sooner or later, your team will encounter an ambiguous phrase or an edge case that is not clearly covered. How you handle these moments defines a mature annotation operation.

The goal is not just to fix one annotation. It is to use every ambiguous case as an opportunity to strengthen the project's foundation. This constant cycle of refinement is the secret to achieving accuracy at scale.

When an edge case arises, the best practice is to document it, escalate it to the project lead or SME for a final ruling, and then update the central annotation guidelines with the new example. This creates a living document that evolves with the project, ensuring everyone on the team, present and future, benefits from the clarification. This is a core part of our professional data annotation services; we turn today’s challenges into tomorrow's consistency.

Adapting Annotation for Specialized Industries

A generic annotation schema will only get your AI model so far. To build something truly powerful with industry-specific intelligence, you must adapt your annotation process to the unique language, rules, and goals of that sector.

A sample annotation of an article from the finance world will look completely different from one in healthcare. This is where real value is created, and it is what separates a generic AI from a tool that delivers a measurable business impact. Every industry has its own jargon and critical data points that a standard model would simply overlook.

Annotation in Healthcare and Life Sciences

In healthcare, precision is a requirement, and compliance is paramount. When annotating something as critical as a clinical trial report or a medical journal, the focus must be on identifying highly specific entities that are vital for research and patient safety.

A generic "ORGANIZATION" tag is insufficient. You need granular labels that can differentiate between a pharmaceutical company, a research institution, and a regulatory body like the FDA.

An effective schema for a medical text project would include labels like:

- Adverse Event: Tagging any mention of a negative patient reaction to a treatment.

- Drug Name: Differentiating between brand names and their generic compound names.

- Patient Demographics: Capturing anonymized data like age ranges or specific patient groups.

- Efficacy Outcome: Labeling phrases that describe whether a treatment succeeded or failed.

This detailed approach transforms dense medical texts into structured data for pharmacovigilance, helping teams spot safety signals much faster than any manual review ever could.

Financial Services Annotation for Market Insights

For the financial industry, speed and sentiment are the name of the game. The purpose of annotating news articles, earnings call transcripts, or social media is to get ahead of market trends.

Relationship extraction is absolutely key here. You want to be able to link a CEO's statement directly to their company's stock symbol or pinpoint the sentiment surrounding a new product launch.

Key financial entities often include:

- Ticker Symbol: Identifying stock market symbols (e.g., AAPL).

- Acquisition/Merger: Tagging events related to corporate consolidation.

- Monetary Value: Capturing specific figures related to revenue, profit, or deal sizes.

- Forward Looking Statement: Identifying phrases that make predictions about future performance.

E-commerce Annotation for Customer Understanding

In e-commerce, the voice of the customer is paramount. Annotating thousands of product reviews and feedback forms helps businesses understand exactly what customers love or hate about their products. The focus here is on attribute-based sentiment analysis, linking specific opinions directly to specific product features.

For example, a classic use case is extracting every mention of "battery life" and linking it to a sentiment label like "poor" or "excellent." This gives product development teams direct, actionable feedback from a huge dataset, guiding future improvements in a way that surveys never could. This granularity is what separates a generic AI from one that drives tangible business growth.

The demand for these specialized services is exploding. The global data annotation and labeling market is projected to grow from USD 1.2 billion to USD 10.2 billion by 2034, fueled by the need for high-quality, industry-specific datasets. You can find more insights about this market growth on Global Insight Services.

At Prudent Partners, we help organizations build these custom annotation frameworks from the ground up, ensuring their AI models are trained on data that truly reflects their unique operational world.

Common Questions About Article Annotation

As teams engage with article annotation, a few key questions always arise. Addressing these details from the start is what separates a smooth project from a frustrating one and is essential for achieving your AI goals. Here are some of the most common inquiries we see.

What Is the Difference Between NER and Sentiment Analysis?

It is easy to confuse these two, but they play very different roles in understanding text.

Named Entity Recognition (NER) is about what is being discussed. It is the process of finding and classifying key pieces of information, like tagging "Apple Inc." as an ORGANIZATION or "Dr. Jane Smith" as a PERSON. Think of it as identifying the key actors and subjects in the story.

Sentiment analysis, on the other hand, is about how it is being discussed. This task determines the emotional tone of a piece of text, classifying it as positive, negative, or neutral. In a fully annotated article, you will almost always use both. NER points out the key players, and sentiment analysis tells you how people feel about them.

How Do You Choose the Right Annotation Tool?

There is no single "best" tool; the right one depends entirely on your project's scale, complexity, and how your team works.

- For simple, smaller-scale projects: Open-source tools like Doccano can be a great starting point. They provide essential functionality without significant overhead.

- For large, complex projects: You will want to look at enterprise-grade platforms. Tools like Labelbox or Amazon SageMaker Ground Truth are built for managing complex workflows, quality control, and team collaboration at scale.

When evaluating tools, focus on features that directly support your annotation schema, offer robust QA workflows, and provide flexible data export options. A good tool should enhance your process, not hinder it.

How Do You Handle Disagreements Between Annotators?

First, disagreements are not a problem; they are an expected and valuable part of the process. Language is full of ambiguity. The key is to have a clear system for resolving these moments and using them to make your guidelines stronger.

Our approach is built on consensus. When two annotators disagree, the text gets flagged for a second look by a senior annotator or a subject matter expert (SME). That person makes the final call, aligning it with the project guidelines.

The most important part is that every disagreement is a chance to learn. We treat these cases as opportunities to refine the annotation guide, adding specific examples that clarify the rules and prevent the same issue from happening again. This constant refinement is how you build and maintain quality over time.

What Are Common Pitfalls to Avoid in Annotation?

We have seen a few common missteps that can significantly hurt the quality of your final dataset. One of the biggest is writing vague or incomplete guidelines, which is the number one cause of inconsistent labels. Another is skimping on the quality assurance process, assuming a single review is sufficient.

You also have to watch out for annotator bias and fatigue, which can creep in and degrade accuracy. Finally, be careful not to define labels that are too broad or too granular for what your AI model actually needs. The best defense is always careful planning, clear guidelines, and a disciplined QA process from day one.

At Prudent Partners, we transform complex article annotation challenges into high-quality, AI-ready datasets. Our human-centered approach, backed by a rigorous multi-layer QA process, ensures the precision and scalability your projects demand. We specialize in delivering customized solutions that drive measurable impact and accuracy.

Connect with us to build a data foundation you can trust. Schedule Your Free Consultation with Prudent Partners