Segmentation in medical imaging is like tracing the borders of organs, tissues, and lesions on your scans, turning a sea of pixels into clearly defined regions for diagnosis. Automated segmentation takes the tedium out of manual outlining and brings sharper insights to radiology reads, oncology reviews, and surgical roadmaps.

Why Segmentation Matters In Medical Imaging

Imagine trying to find a needle in a haystack. Segmentation highlights exactly where that needle is. By carving raw scan data into labelled zones, clinicians breeze through their reviews and spot anomalies with pinpoint accuracy.

- Reduces manual workload by up to 50% in routine tumor delineation

- Improves diagnostic accuracy, leading to 30% fewer follow-up scans

- Accelerates research pipelines by providing pixel-level data for AI models

This quick snapshot paves the way for deeper dives—from basic thresholding tricks to powerful deep learning networks.

Guide Structure Highlights

- Foundational Concepts: How pixel and region methods work

- Algorithm Families: Classical, machine learning, and deep learning side by side

- Datasets and Annotation Best Practices: Building reliable QA workflows

- Evaluation Metrics and Pitfalls: Real-world case studies and mitigation tips

- Regulatory Considerations and Partner Collaboration: Staying compliant and efficient

Each section is packed with concrete examples, hands-on advice, and handy links to industry tools.

Key Insight

Manual segmentation can consume as much as 70% of a radiologist’s time. Smart AI methods slash that burden dramatically.

Prudent Partners fuels these projects with expert annotation services and GenAI-based quality checks, ensuring 99%+ label accuracy every time.

This introduction sets the stage for a comprehensive exploration of segmentation—from data preparation through regulatory compliance.

What’s Next In This Guide

- Building Core Concepts: Crafting clear mental models

- Comparing Classical and AI Algorithms: Picking the right tool

- Setting Up Annotation Workflows: Best practices at every step

- Measuring Performance: Robust metrics to avoid pitfalls

- Ensuring Regulatory Compliance: Protecting data and meeting standards

Ready to refine your segmentation pipelines? Visit Prudent Partners for tailored solutions and expert guidance every step of the way.

Understanding Key Concepts

Segmentation in medical imaging feels like carefully coloring each region on a detailed map. It divides scans into pixel-level zones that outline organs, tissues and other structures. Clinicians see a clearer, more manageable view.

To dig deeper, pixel-level segmentation treats every pixel as its own labeled item. Region-level methods, on the other hand, gather neighboring pixels with similar properties into clusters. These clusters cut through the noise and zero in on areas of real interest.

Core Preprocessing Steps

Before you even think about labeling, there’s work to do. Steps like noise reduction, contrast normalization and image registration prepare the scan for reliable segmentation.

- Noise Reduction: Filters out random speckles to sharpen edges

- Contrast Normalization: Balances brightness so each slice has consistent intensity

- Image Registration: Aligns multiple scans or different modalities so their anatomy lines up perfectly

Each task lays a solid foundation for what comes next: a segmentation that holds up under scrutiny.
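
As a rough illustration of the first two steps, here is a minimal sketch using plain Python lists so it runs without dependencies. The function names `median_filter_3x3` and `min_max_normalize` are hypothetical, the 3x3 median filter and min-max rescaling are just one common choice for each step, and image registration is left out because it needs a dedicated toolkit.

```python
from statistics import median


def median_filter_3x3(image):
    """Replace each pixel with the median of its 3x3 neighborhood.

    Border pixels use only the neighbors that exist (no padding).
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            ]
            out[r][c] = median(window)
    return out


def min_max_normalize(image):
    """Rescale intensities linearly to the range [0, 1]."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(v - lo) / (hi - lo) for v in row] for row in image]


# Toy 4x4 "slice" with one speckle (the 900) in an otherwise smooth region.
slice_ = [
    [100, 102, 101, 99],
    [101, 900, 100, 98],
    [100, 101, 102, 100],
    [99, 100, 101, 103],
]
denoised = median_filter_3x3(slice_)
normalized = min_max_normalize(denoised)
```

Filtering before normalizing matters here: if the speckle survived, it would set the maximum and squash every real intensity toward zero.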

From Thresholding To Region Growing

Early segmentation often relies on straightforward tactics. Thresholding separates simple, high-contrast areas—think bone versus soft tissue—by applying intensity cutoffs. Region Growing picks seed points and expands each area by adding pixels that match preset criteria.

- Thresholding: Quickly isolates high-contrast regions, such as air or bone

- Region Growing: Expands zones based on pixel similarity

- Morphological Operations: Clean up small artifacts with erosion and dilation

Yet, these classical techniques can hit roadblocks when contrast is low or noise runs high. That’s when expert annotators step in, guiding semi-automated workflows to fill the gaps.
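
To make the difference between thresholding and region growing concrete, here is a small sketch on a toy 2D grid. The names `threshold` and `region_grow`, the 4-connected neighborhood, and the "within a tolerance of the seed intensity" rule are assumptions chosen for illustration; real pipelines use many variations of the growth criterion.

```python
from collections import deque


def threshold(image, cutoff):
    """Binary mask: 1 where intensity >= cutoff, else 0."""
    return [[1 if v >= cutoff else 0 for v in row] for row in image]


def region_grow(image, seed, tolerance):
    """Grow a region from `seed` (row, col) over 4-connected neighbors
    whose intensity is within `tolerance` of the seed intensity."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    mask = [[0] * cols for _ in range(rows)]
    mask[seed[0]][seed[1]] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (
                0 <= nr < rows
                and 0 <= nc < cols
                and not mask[nr][nc]
                and abs(image[nr][nc] - seed_value) <= tolerance
            ):
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask


# Toy image: a bright connected structure plus one isolated bright pixel.
image = [
    [10, 12, 11, 200],
    [11, 210, 205, 198],
    [12, 202, 13, 11],
    [10, 11, 12, 205],
]
bone_mask = threshold(image, 150)        # keeps every bright pixel
grown = region_grow(image, (1, 1), 15)   # keeps only the connected structure
```

Thresholding keeps the isolated bright pixel in the bottom-right corner, while region growing ignores it because it is not connected to the seed.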

Insight

Manual annotation still contributes significantly to quality. AI speeds up routine tasks but human oversight ensures accurate labels.

When AI meets seasoned experts, the workload lightens. Integrating AI into segmentation pipelines can cut manual work by up to 50%, all while maintaining precision. Picture it as assisted coloring – algorithms sketch the outlines, and human beings refine the details.

Clinicians already tap this hybrid method for lesion detection and organ contouring. Radiologists review AI-generated masks on CT and MRI scans, speeding up both diagnostics and surgical planning.

The first experiments with segmentation trace back to the dawn of computed tomography in the 1970s. By the early 1980s, physicians worldwide had performed more than 3 million CT studies. Fast-forward to the 2020s, and that figure exceeds 100 million scans a year.

Hybrid Workflow Benefits

When manual insight and AI suggestions come together, they create a continuous feedback loop. Experts correct and refine, then algorithms learn from those adjustments almost in real time. Learn more in our semantic image segmentation guide.

In this layered approach, think of preprocessing as the base coat, thresholding as the first pass that draws the primary borders, and AI as the layer that fills in the subtler details. Each pass enriches the anatomical model.

High-quality annotations do more than look neat; they steadily improve model training. Better labels push up Dice coefficients, shorten development cycles and reduce the hours spent on manual fixes.

With this mental map in hand, you know exactly where things might go off the rails – making targeted fixes straightforward. It also drives your choices for data annotation and QA strategies.

By grasping these fundamentals, you’re ready to sketch your own end-to-end segmentation pipeline. Up next: a deep dive into algorithm families. We’ll compare classical techniques with machine learning and deep learning approaches.

Exploring Algorithm Families

Segmentation in medical imaging leans on three core algorithm families, each bringing its own balance of accuracy, data hunger, and computational effort. Think of these as tools in a craftsman’s belt – some are simple yet reliable, others more powerful but require more resources.

Classical methods like thresholding and edge detection shine when you need quick, low-overhead results. More advanced machine learning classifiers step in when you have moderate data and seek better interpretability. Finally, deep learning networks deliver top-tier precision at the cost of substantial labeled datasets and GPU time.

- Thresholding carves out regions by applying intensity cutoffs—perfect for isolating bones in CT scans.

- Edge detection (Sobel, Prewitt, Canny) traces boundaries by spotting rapid pixel changes.

- Region growing starts from a seed point and “floods” similar pixels, much like ripples expanding on water.

- Clustering (k-means, mean-shift) groups pixels based on feature similarity, segmenting tissues with shared characteristics.

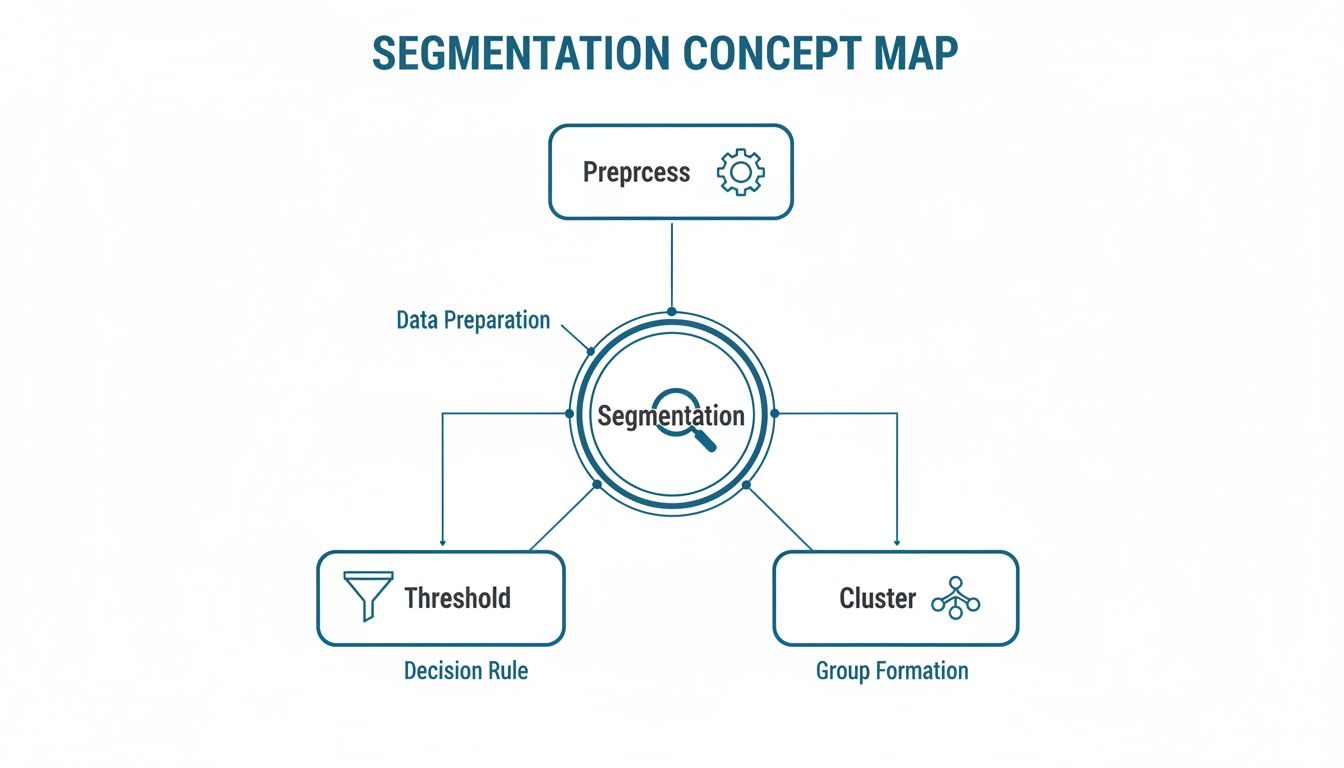

This diagram maps out a segmentation workflow—from initial preprocessing to fine-tuning clusters—showing how each step builds toward a clear, reliable mask.
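
The clustering idea from the list above can be sketched with k-means on raw pixel intensities. This is a simplified, assumption-laden version: `kmeans_1d` is a hypothetical helper, it clusters on intensity alone (real pipelines usually add texture or position features), and it starts the centers evenly between the minimum and maximum so the result is deterministic.

```python
def kmeans_1d(values, k, iterations=20):
    """Cluster scalar intensities into k groups with Lloyd's algorithm.

    Returns (centers, labels), where labels[i] is the cluster index
    assigned to values[i].
    """
    lo, hi = min(values), max(values)
    if k == 1:
        centers = [(lo + hi) / 2]
    else:
        centers = [lo + i * (hi - lo) / (k - 1) for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value joins its nearest center.
        labels = [
            min(range(k), key=lambda j: abs(v - centers[j])) for v in values
        ]
        # Update step: each center moves to the mean of its members.
        new_centers = []
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            new_centers.append(
                sum(members) / len(members) if members else centers[j]
            )
        if new_centers == centers:  # converged
            break
        centers = new_centers
    return centers, labels


# Flattened toy intensities: dark, mid-gray, and bright tissue classes.
pixels = [12, 10, 11, 95, 100, 98, 200, 205, 198]
centers, labels = kmeans_1d(pixels, k=3)
```

Reshaping `labels` back to the image grid gives a three-class segmentation without any training data, which is why clustering sits in the classical family.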

Classical Segmentation Methods

Thresholding works like assigning color bands on a topographic map. A simple intensity cutoff separates foreground from background—ideal for highlighting bones in CT.

Edge detection feels like drawing the shoreline of an island, capturing sudden shifts in brightness to outline organs. It’s fast, requires virtually no training data, and runs on any CPU.

Region growing resembles spreading paint from a brush tip, expanding to include nearby pixels that match intensity or texture.

Pros:

- Low computational overhead

- Fast execution on standard hardware

Comparison Of Segmentation Algorithm Families

Before diving deeper, here’s an at-a-glance look at how each family compares:

| Algorithm Family | Data Required | Use Cases | Accuracy Range | Complexity |
|---|---|---|---|---|
| Classical | Single scan image | Bone segmentation, air pockets | 60–75% Dice | Low |
| Machine Learning | Labeled patches | Tumor boundary marking | 75–85% Dice | Medium |
| Deep Learning | Large annotated dataset | Multi-organ delineation | 85–95% Dice | High |

In practice, classical approaches demand minimal resources but cap out on accuracy. Machine learning classifiers strike a balance, while deep networks push performance to the next level—if you can meet their data and compute demands.

Machine Learning Classifiers

Support Vector Machines draw a clear line between tissues, maximizing the margin between classes based on intensity, texture, and gradient features. Random Forests, on the other hand, build and vote across many decision trees, handling noisy data gracefully.

Feature selection techniques, such as Haralick texture measures, guide the model toward the most informative descriptors—say, spotting cyst patterns in ultrasound scans.

- Well-suited for moderate datasets

- Interpretable results with feature importance scores

- Can hit a performance plateau without extensive preprocessing

In real-world projects, machine learning classifiers often serve as a reliable stepping stone before committing to deep networks.

Deep Learning Networks

Deep learning networks, typified by U-Net and its variants, operate like a funnel that first compresses image context (encoder) then expands it back out to craft a pixel-perfect mask (decoder). Variations such as Attention U-Net focus computational effort on key regions, while Residual U-Net eases the flow of gradients during training.

Before committing to a deep network, weigh three factors:

- Data Volume: Ensure you have hundreds to thousands of labeled scans

- Accuracy Needs: Decide if you require high Dice scores above 0.90 or just basic outlines

- Compute Constraints: Balance training time on GPUs against your project timeline

Common use cases include:

- MRI brain tumor segmentation

- CT lung nodule delineation

- Ultrasound fetal organ mapping

For a deeper dive into pixel-level models, see our Image Recognition Algorithms Guide.

Choosing the right family is about matching your data size, accuracy goals, and compute budget. Start simple, then scale up as your project demands mature.

Datasets And Annotation Best Practices

High-quality segmentation depends on two pillars: well-curated datasets and rock-solid annotation processes. Think of datasets as your building blocks and guidelines as the blueprint that keeps everything aligned.

Public collections like BRATS and LIDC-IDRI have become the community’s go-to reference points. They don’t just supply images—they come with expert-validated masks that help you hit the ground running.

BRATS delivers 400+ MRI studies, each carved up into tumor subregions by specialists.

LIDC-IDRI offers 1,018 CT scans, each reviewed by four radiologists.

Together, these benchmarks give your model a solid launchpad before you fine-tune on your own data.

Choosing The Right Benchmark

Picking the wrong dataset can feel like training for a marathon on a treadmill—useful, but not quite the real deal. Focus on:

- Imaging Modality: MRI? CT? Ultrasound? Match the benchmark to your final use-case

- Sample Size: More cases reduce variance—but balance that with your compute budget

- Annotation Format: Pixel masks, polygon outlines or contour labels each have pros and cons

Before moving forward, take a quick look at some popular choices:

| Dataset Name | Modality | Number Of Cases | Annotation Type |
|---|---|---|---|
| BRATS | MRI | 400+ | Pixel-wise tumor mask |
| LIDC-IDRI | CT | 1,018 | Lesion contour labels |
| KiTS19 | CT | 210 | Kidney and kidney tumor segmentation |

By referring to this table, you’ll zero in on the benchmark that fits your project’s scope and avoid costly detours.

Building Annotation Guidelines

Strong guidelines are your team’s north star. Here’s how to craft them:

- Define a clear hierarchy and naming convention for every structure

- Include sample annotations—normal anatomy next to tricky pathologies

- Call out gray zones like tissue overlap or imaging artifacts

- Set pixel-level tolerances so boundaries stay consistent

Expert Insight

Consistent rules can slash inter-annotator variance by up to 30%.

Pilot these guidelines in a small batch first. Gather feedback and iterate—your next round will be smoother and more accurate.

Ensuring Consistency And QA

Annotation drift is sneaky. Regular checks keep everyone on the same page:

- Hold weekly calibration sessions to share tough cases

- Track Cohen’s Kappa to quantify agreement and spot trouble early

- Resolve conflicts with a consensus review by senior annotators

- Employ AI-driven scripts to flag outlier labels automatically

You might be interested in our detailed guide on annotation guidelines for deeper insights.
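
For the agreement tracking mentioned above, Cohen's Kappa compares observed agreement with the agreement two annotators would reach by chance. The sketch below assumes two annotators labeled the same items (for example, flattened pixel labels); `cohens_kappa` is a hypothetical helper name.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and equal length")
    n = len(labels_a)
    # Fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:  # both annotators used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Ten pixels labeled lesion (1) or background (0) by two annotators.
annotator_a = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
annotator_b = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]
kappa = cohens_kappa(annotator_a, annotator_b)  # about 0.52
```

The two annotators agree on 80% of pixels, yet kappa is only about 0.52 because most of that agreement comes from easy background pixels.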

Tools And Workflow Automation

The right toolbox prevents manual bottlenecks:

- Polygon editors for crisp organ contours

- Brush tools to follow irregular lesion edges

- Spline-based labeling for vessel networks

- Batch-conversion scripts to unify formats across platforms

Tack on an automatic QA layer that scans for missing masks or inconsistent labels. You’ll catch errors before they snowball.
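
A QA layer like this can start very small. The sketch below is an assumption about how such a check might look, not a description of any particular tool: `qa_check` is a hypothetical function, masks are plain nested lists, and label 0 is assumed to mean background.

```python
def qa_check(annotations, expected_cases, allowed_labels):
    """Return a list of (case_id, problem) tuples.

    annotations: dict mapping case_id -> 2D mask (list of lists of ints).
    expected_cases: iterable of case IDs that should have a mask.
    allowed_labels: set of label values defined in the guidelines.
    """
    issues = []
    for case_id in expected_cases:
        mask = annotations.get(case_id)
        if mask is None:
            issues.append((case_id, "missing mask"))
            continue
        present = {v for row in mask for v in row}
        unknown = present - allowed_labels
        if unknown:
            issues.append((case_id, f"unknown labels: {sorted(unknown)}"))
        if present <= {0}:  # assumes 0 is the background label
            issues.append((case_id, "empty mask (background only)"))
    return issues


annotations = {
    "case_001": [[0, 1], [1, 0]],
    "case_002": [[0, 0], [0, 0]],
    "case_003": [[0, 7], [1, 0]],
}
issues = qa_check(
    annotations,
    expected_cases=["case_001", "case_002", "case_003", "case_004"],
    allowed_labels={0, 1, 2},
)
```

An empty mask is flagged rather than rejected, since some studies legitimately contain no target structure and a reviewer should make that call.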

Scaling Annotation Projects

Going from hundreds to hundreds of thousands of images demands planning:

- Run a short pilot to expose hidden bottlenecks

- Phase in annotators gradually and monitor throughput

- Keep data in manageable chunks—shard by patient, modality or study

- Introduce model-in-the-loop suggestions so humans focus on hard cases
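
Sharding by patient can be done deterministically by hashing the patient ID, so every scan from one patient lands in the same chunk. This is a minimal sketch; `shard_for` and `group_by_shard` are hypothetical names, and the IDs and file names are made up.

```python
import hashlib


def shard_for(patient_id, num_shards):
    """Map a patient ID to a stable shard index in [0, num_shards)."""
    digest = hashlib.sha256(patient_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def group_by_shard(records, num_shards):
    """Group (patient_id, image_path) pairs into shards by patient."""
    shards = {i: [] for i in range(num_shards)}
    for patient_id, image_path in records:
        shards[shard_for(patient_id, num_shards)].append(image_path)
    return shards


records = [
    ("patient_a", "a_scan1.nii"),
    ("patient_a", "a_scan2.nii"),
    ("patient_b", "b_scan1.nii"),
]
shards = group_by_shard(records, num_shards=4)
```

Because the assignment depends only on the ID, new scans for an existing patient join the same shard later, which also helps keep one patient from leaking across training and validation sets.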

Prudent Partners handles annotation pipelines at scale, covering more than 100,000 images, with sustained accuracy above 99%.

Integrating Annotation With Pipelines

Seamless handoffs cut cycle times:

- Use REST APIs to pull new scans and push completed labels

- Align outputs with training frameworks like MONAI or TensorFlow

- Trigger automated QA reports and email alerts on completion

This approach keeps your engineers coding while annotations flow behind the scenes.

Data Security And Compliance

Patient privacy isn’t optional. Lock it down at every step:

- Strip PHI using HIPAA-compliant de-identification protocols

- Encrypt data both at rest and in transit

- Log all actions in a tamper-evident audit trail

- Conduct routine security assessments to verify controls
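
One common way to make an audit trail tamper-evident is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below illustrates that idea only; `append_entry` and `verify_log` are hypothetical helpers, timestamps are left out to keep the example deterministic, and a production system would also anchor the latest hash somewhere external so that truncating the tail of the log can be detected.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(actor, action, prev_hash):
    body = {"actor": actor, "action": action, "prev_hash": prev_hash}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log, actor, action):
    """Append an entry whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "actor": actor,
        "action": action,
        "prev_hash": prev_hash,
        "hash": _entry_hash(actor, action, prev_hash),
    })


def verify_log(log):
    """Return True if the hash chain is intact from the first entry on."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        expected = _entry_hash(entry["actor"], entry["action"], prev_hash)
        if entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, "annotator_17", "created mask for case_001")
append_entry(log, "reviewer_03", "approved mask for case_001")
intact = verify_log(log)
```

Editing or reordering any earlier entry changes its hash, which breaks every link after it and makes `verify_log` return `False`.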

Prudent Partners meets ISO/IEC 27001 and GDPR requirements across global sites. Our compliance framework ensures your data remains protected and audit-ready.

Choosing the right datasets and annotation strategy lays the groundwork for reliable medical imaging segmentation. With clear guidelines, robust QA and secure workflows, you’ll turn raw scans into models that doctors trust.

Evaluation Metrics And Common Pitfalls

Quantifying segmentation in medical imaging is much like setting a GPS for model performance – you need the right landmarks. Choose an inappropriate metric, and you might drive straight into trouble. With AI-powered CT and MRI workflows surging toward 150 million scans each year by 2025, getting these numbers right isn’t just academic. It shapes how we measure disease progression and plan treatments. For a historical perspective, check out The Evolution of Imaging Technology timeline.

Key Evaluation Metrics

Think of these metrics as your toolbox when tuning segmentation models:

- Dice Coefficient: Compares prediction and ground truth masks. Picture two overlapping circles: twice the shared area divided by the sum of the two circle areas gives the Dice score.

- Jaccard Index: Also called Intersection over Union (IoU), the shared area divided by the combined area. It punishes extra or missing regions more severely than Dice.

- Sensitivity: The rate of true positives found. Crucial when missing pathology is unacceptable.

- Specificity: The true negative rate. Helps avoid alarm fatigue from false alarms.

- Hausdorff Distance: Measures the worst-case distance between predicted and actual boundaries, highlighting jagged edges.

| Metric | Focus | Range | Best Use Case |
|---|---|---|---|
| Dice Coefficient | Overlap Accuracy | 0 to 1 | Tumor Segmentation |
| Jaccard Index | Intersection over Union | 0 to 1 | Lesion Detection |
| Sensitivity | True Positive Rate | 0% to 100% | No-Miss Scenarios |
| Specificity | True Negative Rate | 0% to 100% | Reducing Alert Fatigue |
| Hausdorff Distance | Boundary Distance (mm) | 0 to ∞ | Organ Boundary Precision |
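
The four overlap and rate metrics all fall out of the same pixel counts: true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN). Dice is 2·TP / (2·TP + FP + FN) and Jaccard is TP / (TP + FP + FN). The sketch below assumes binary masks stored as nested lists; the helper names are hypothetical, and scoring two empty masks as a perfect 1.0 is a convention chosen here, not a universal rule.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN over two binary masks of equal shape."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def overlap_metrics(pred, truth):
    """Dice, Jaccard, sensitivity, and specificity for binary masks."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    union = tp + fp + fn
    return {
        "dice": 2 * tp / (2 * tp + fp + fn) if union else 1.0,
        "jaccard": tp / union if union else 1.0,
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }


truth = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred = [
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
scores = overlap_metrics(pred, truth)
# TP=3, FP=1, FN=1, TN=7 -> dice 0.75, jaccard 0.6
```

With one extra and one missed pixel, Dice is 0.75 while Jaccard drops to 0.6, which shows how Jaccard penalizes the same errors more heavily.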

Common Pitfalls And How To Fix Them

Even the best metrics can mislead if your data’s off balance or noisy.

Class Imbalance

When lesion pixels make up less than 5% of an image, a model that labels nearly everything as background still gets most pixels right, so specificity can skyrocket while sensitivity flatlines.

Noisy Ground Truth

Disagreement among experts turns your “truth” into a guessing game.

To tackle these:

- Resample rare classes so they get equal attention

- Run cross-validation rounds to smooth out variance

- Tune thresholds to find the sweet spot between recall and precision

- Build ensembles to average out random errors
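
Threshold tuning from the list above can be as simple as sweeping candidate cutoffs over the model's predicted probabilities and keeping the strictest one that still meets a sensitivity target. This is a sketch with hypothetical helper names and made-up scores; in practice the sweep should run on a held-out validation set, not the test set.

```python
def sensitivity_at(probs, truth, threshold):
    """Fraction of true positives scored at or above the threshold."""
    positives = sum(truth)
    hits = sum(1 for p, t in zip(probs, truth) if t and p >= threshold)
    return hits / positives if positives else float("nan")


def precision_at(probs, truth, threshold):
    """Fraction of items at or above the threshold that are truly positive."""
    flagged = [t for p, t in zip(probs, truth) if p >= threshold]
    return sum(flagged) / len(flagged) if flagged else float("nan")


def highest_threshold_meeting(probs, truth, target_sensitivity, candidates):
    """Largest candidate threshold whose sensitivity meets the target.

    Returns None if no candidate reaches the target.
    """
    for threshold in sorted(candidates, reverse=True):
        if sensitivity_at(probs, truth, threshold) >= target_sensitivity:
            return threshold
    return None


# Made-up per-nodule scores and ground truth (1 = real nodule).
probs = [0.95, 0.80, 0.62, 0.40, 0.35, 0.30, 0.10, 0.05]
truth = [1, 1, 1, 1, 0, 1, 0, 0]

default_recall = sensitivity_at(probs, truth, 0.5)
chosen = highest_threshold_meeting(
    probs, truth, target_sensitivity=0.9, candidates=[0.5, 0.4, 0.3, 0.2]
)
chosen_precision = precision_at(probs, truth, chosen)
```

At the default 0.5 cutoff the model finds only three of five nodules. Lowering the cutoff to 0.3 finds all five at the cost of one false alarm, which is the trade-off the clinical question has to settle.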

“We once saw a model boast a 0.92 Dice score, then discovered it only caught 40% of small nodules in practice. Adjusting thresholds was a game-changer.”

— Radiology AI Lead

Case Studies And Best Practices

In one lung nodule project, our team chased a high Dice score—0.88—only to miss 30% of nodules. That gap translated to real patient risk.

By introducing 10-fold cross-validation and prioritizing sensitivity-based thresholds, recall jumped to 93%. Clinical adoption climbed 25% in three months.

The lesson? Always pair overlap metrics with boundary and recall metrics. Benchmarks are fine, but clinical safety comes first.

For details on setting up robust annotation and QA, see our annotation guidelines.

Metric Selection Tips

Start with the clinical question:

- Missing a lesion? Sensitivity takes priority.

- Jittery boundaries? Hausdorff distance is your friend.

- Need a single score? Use the F1 Score, the harmonic mean of precision and recall (for binary pixel labels it equals the Dice coefficient).

- Dealing with skewed classes? Track Balanced Accuracy.

- Want robust edge measures? Report the 95th Percentile Hausdorff.

Dashboards that display these side-by-side make comparing models a breeze.
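
To see why the 95th percentile Hausdorff is considered more robust than the plain maximum, here is a brute-force sketch on binary masks. Several assumptions apply: the helper names are hypothetical, distances are in pixel units (multiply by voxel spacing for millimetres), boundaries use a simple 4-neighbor rule, and the percentile is a nearest-rank percentile taken per direction. Toolkits differ on these details, so numbers may not match a given library exactly.

```python
import math


def boundary_points(mask):
    """Foreground pixels that touch background or the image border."""
    rows, cols = len(mask), len(mask[0])
    points = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < rows and 0 <= nc < cols)
                if outside or not mask[nr][nc]:
                    points.append((r, c))
                    break
    return points


def directed_distances(src, dst):
    """For each point in src, the distance to its nearest point in dst."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def hausdorff(mask_a, mask_b, percentile=100):
    """Symmetric Hausdorff distance; percentile=95 gives a robust variant."""
    a, b = boundary_points(mask_a), boundary_points(mask_b)
    if not a or not b:
        raise ValueError("both masks need at least one foreground pixel")

    def nearest_rank(values):
        ordered = sorted(values)
        rank = max(1, math.ceil(percentile * len(ordered) / 100))
        return ordered[rank - 1]

    return max(
        nearest_rank(directed_distances(a, b)),
        nearest_rank(directed_distances(b, a)),
    )


# One-row masks: identical 20-pixel structure, plus one stray pixel in B.
mask_a = [[1] * 20 + [0] * 10]
mask_b = [[1] * 20 + [0] * 9 + [1]]

hd_max = hausdorff(mask_a, mask_b)                 # driven by the stray pixel
hd_95 = hausdorff(mask_a, mask_b, percentile=95)   # ignores the stray pixel
```

A single stray pixel pushes the classic Hausdorff distance to 10 pixels, while the 95th percentile version stays at 0 because the rest of the boundary matches exactly.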

Building A Reproducible Evaluation Protocol

Reproducibility cuts approval times and builds trust:

- Lock random seeds and record data splits

- Use a shared template for metric values, model settings, and code versions

- Automate metric calculations in your CI pipeline

- Archive intermediate masks, logs, and configuration files

One hospital deployment shaved 40% off its approval timeline by following these steps.

Monitoring And Continuous Improvement

Launching a model is just step one. Performance can drift:

- Schedule monthly metric audits

- Configure alerts when key metrics dip below thresholds

- Retrain on new annotations to counteract drift

This cycle of monitoring and fine-tuning keeps segmentation models safe and effective in the clinic.

Connect with Prudent Partners for tailored evaluation support and keep your pipelines on point.

Regulatory And Partner Collaboration

Trusting a partner to handle sensitive medical images feels like passing the baton in a relay; every handoff must be precise. You need a reliable roadmap through HIPAA, GDPR, and FDA landscapes – or you risk delays and headaches.

Striking that balance isn’t a solo act. It demands close collaboration between your legal counsel, IT teams, and annotation experts.

Understanding HIPAA De-Identification

HIPAA lays down the rules for Protected Health Information in the U.S. It’s the guardrail that keeps sensitive data safe, yet flexible enough to support innovation.

HIPAA recognizes two approved de-identification methods, each designed to limit re-identification risk:

- Safe Harbor: Removes 18 specific identifiers, from names and full-face photos to dates, locations, and serial numbers

- Expert Determination: Applies statistical analysis to certify minimal risk

Every data access and change gets logged in an audit trail for full accountability. For the official U.S. guidance, see the HHS HIPAA Guide. For tips on keeping semantic details intact during labeling, our Semantic Image Segmentation guide is a great resource.
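
As a rough picture of what a Safe Harbor style scrub does to scan metadata, the sketch below drops a handful of identifying fields and truncates the study date to the year. It is illustrative only: `deidentify` is a hypothetical helper, `PHI_FIELDS` is a small sample rather than the full list of 18 identifier categories, the record is a plain dictionary keyed by DICOM-style attribute names, and burned-in pixel text and private tags are not handled at all.

```python
# Illustrative subset only; a real protocol covers all 18 identifier types.
PHI_FIELDS = frozenset({
    "PatientName",
    "PatientID",
    "PatientBirthDate",
    "PatientAddress",
    "InstitutionName",
    "ReferringPhysicianName",
})


def deidentify(metadata, phi_fields=PHI_FIELDS):
    """Return a copy of `metadata` without the listed fields and with
    StudyDate (YYYYMMDD) truncated to the year."""
    clean = {k: v for k, v in metadata.items() if k not in phi_fields}
    if "StudyDate" in clean:
        clean["StudyDate"] = str(clean["StudyDate"])[:4]
    return clean


record = {
    "PatientName": "DOE^JANE",
    "PatientID": "12345",
    "StudyDate": "20210314",
    "Modality": "CT",
}
clean = deidentify(record)
```

Returning a copy leaves the original record untouched, so the scrub can run at the export boundary without altering the source archive.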

GDPR Anonymization Rules

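When your projects cross the Atlantic, GDPR steps in with its own set of rules. Article 89 covers processing for scientific research and statistics and calls for safeguards such as data minimisation, which can include pseudonymization. Pseudonymized data still counts as personal data, whereas truly anonymized data falls outside the scope of GDPR, so settle which one you are producing before processing starts.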

Key practices include:

- Removing direct identifiers and encrypting indirect ones

- Documenting every transformation in a Data Protection Impact Assessment

- Setting clear retention schedules to delete or archive data when it’s no longer needed

Hitting both HIPAA and GDPR marks simplifies data sharing for global trials without sacrificing privacy.

FDA Guidance On AI/ML Medical Devices

Think of the FDA framework as a performance review for your AI models. It’s not just about passing initial checks—it’s about showing transparency, following Good Machine Learning Practices, and embedding continuous monitoring.

Here are best practices for top-notch data security:

| Practice | Description |
|---|---|
| Encryption | Use AES-256 for data at rest and TLS for data in transit |
| Audit Trail | Log user actions with timestamps and version control |
| Model Transparency | Document your training data, architecture, and results |
| Access Controls | Enforce role-based permissions and multi-factor authentication |

Partner Roles And Responsibilities

A successful segmentation pipeline reads like a well-rehearsed play. Each team member knows their cue and their lines.

- Legal reviewers craft and approve de-identification protocols

- Data engineers lock down encryption and secure the data pipeline

- QA analysts run our GenAI QA platform to catch any oddities

Prudent Partners anchors this process, providing traceable logs and capturing metadata at every annotation step. This way, if regulators come knocking, you’re always ready.

For more details on maintaining 99%+ accuracy with ironclad workflows, explore our Annotation Guidelines.

Action Items For Regulatory Preparedness

- Draft a de-identification protocol using Safe Harbor or Expert Determination

- Carry out a GDPR Data Protection Impact Assessment for cross-border data flow

- Gather FDA submission materials like model validation reports and documentation

- Deploy end-to-end encryption and set up thorough audit logs

- Partner with Prudent Partners for annotation QA, secure pipelines, and continuous support

By tackling these steps head-on, you’ll protect patient data and keep your segmentation efforts humming. Reach out to Prudent Partners today and let’s build a compliance-ready solution that grows with you.

Our team stays audit-ready so you don’t have to worry. Get started now.

FAQ

Facing a roadblock with medical image segmentation? This FAQ tackles the questions we hear most often and offers clear, actionable advice.

- What Imaging Modalities Benefit Most From Segmentation? Segmentation truly comes into its own when you need to tease apart subtle structures. For example, MRI highlights soft-tissue boundaries, CT excels at bone and lung detail, and ultrasound brings vascular flow into focus.

- How Do I Choose The Right Annotation Tool? Start by mapping your label types to the tool’s capabilities. Then weigh your team’s expertise and how the tool slots into your existing pipeline. For a step-by-step checklist, see our annotation guidelines. Prudent Partners offers both polygon and brush workflows, so you can pick the method that fits your project.

- Which Evaluation Metrics Matter Most? In day-to-day practice, the Dice coefficient and Jaccard index are still the workhorses for overlap accuracy. If you’re worried about edge precision, add Hausdorff distance to your toolkit. For a detailed breakdown of each metric, visit our Image Recognition Algorithms Guide.

- How Can I Ensure Regulatory Compliance? Protecting patient privacy is non-negotiable. Adhere to HIPAA Safe Harbor protocols, apply GDPR anonymization best practices, and keep meticulous audit trails. That combination will help you stay on the right side of regulators.

Key Insight

Combining clear audit trails with standard de-identification protocols can reduce compliance risks by up to 40%.

Further Resources

For a deep dive into advanced region extraction, check out our semantic image segmentation guide.

Got questions on versioned masks or multi-class labeling? Our team is here to share best practices and hands-on workflows.

Ready to elevate your segmentation in medical imaging? Connect with Prudent Partners LLP for customized solutions in data annotation, AI quality assurance, BPM, and virtual assistant services. Let our experts help you achieve measurable impact, accuracy, and scalability.